Itility visits AWS re:Invent in Las Vegas

Last Saturday our very own Kjeld, Patrick, and Teun flew to Las Vegas to attend the 2018 AWS re:Invent conference in search of new knowledge and technologies regarding AWS. Keep up to speed with their experiences and find out what they have learned on a day-to-day basis in this blog.

What is AWS re:Invent?

For people who do not know: AWS re:Invent is the annual conference hosted by AWS in Las Vegas. This event brings customers together, engages with the community, and lets attendees learn more about AWS at the largest gathering of the cloud computing community. This week is all about new announcements, valuable technical sessions, and fun after-hours activities.

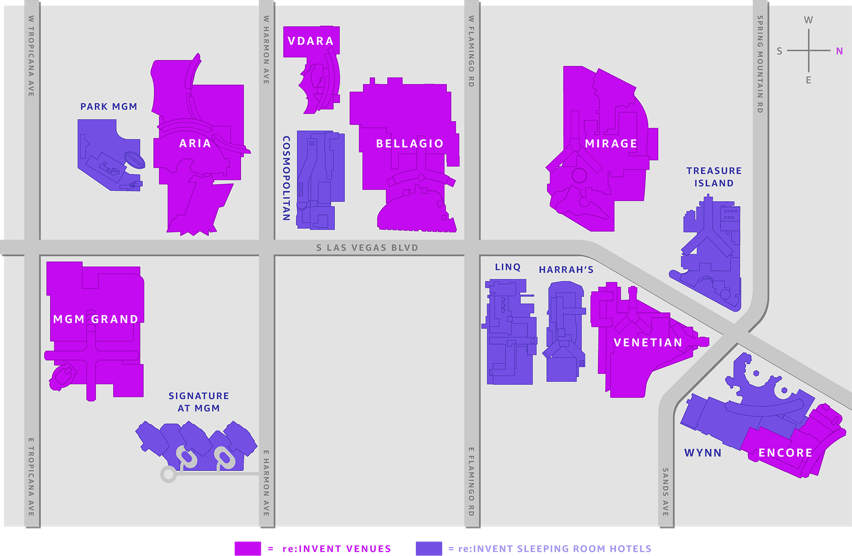

Besides sessions, workshops, chalk talks, and other activities, there are also expos and even a certification lounge in which AWS-certified engineers can meet each other and share experiences. Sessions are hosted all across the Las Vegas Strip in some of the most famous hotels.

Read our experiences below! (Go directly to Day 1, Day 2, Day 3, Day 4, Day 5)

Day 1

After we scoured the Las Vegas Strip on Sunday and got our badges, we were ready for Day 1! The vast number of participants and the sheer size of the conference are unlike anything I had experienced so far in the Netherlands.

AWS employees are on every corner of the street and inside the many hotels to guide you to your next session. Buses are driving up and down the street every 10-15 minutes to get you there on time. Great organization!

I attended the following sessions:

- Top Cloud Security Myths - Dispelled

- Beyond the basics: Advanced Infrastructure as Code Programming on AWS

- Just do it: Nike's Journey to Real-Time Monitoring of its Digital Business

- Serverless Stream Processing Pipeline Best Practices

We also went to the opening of the AWS Expo; I’ll explain a bit more about that later.

Top Cloud Security Myths - Dispelled

The first session was intended for managers and engineers of all levels of experience in using the AWS Cloud. We talked about the thirteen most frequently heard ‘myths’ about AWS Cloud security. Curious to hear them? You can find them below, divided into three sections. If you would like to hear the myths busted, feel free to contact me.

General Cloud Security:

- The AWS Cloud is not secure!

- When I put data into the Cloud, I lose ownership of that data and AWS can use the information for marketing purposes

- I am a highly regulated business and I cannot use the Cloud because of my compliance requirements

- My business requires sensitive personal data, I cannot use the Cloud

- I have a requirement for security testing, I cannot do that in the Cloud

Service Security:

- All of my operating systems are patched automatically in the Cloud

- I cannot use the Cloud to store sensitive data because everyone will have access to it

- I hear about secret keys being stolen, the way you grant access is not secure!

- I cannot control the deletion of my data and I cannot verify if it has been deleted

- Serverless services are not secure because they are shared between customers

Data Security:

- The government can access my data at any time

- A malicious insider can look at my data via your shared administrative access

- It is possible to bypass your isolation technology and access someone else’s data

We can use this information to talk to potential customers that want to partner with us to start their AWS adventure and have questions about potential security risks and mitigating them.

Beyond the basics: Advanced Infrastructure as Code Programming on AWS

To me, this was the most interesting session of the day. We learned new things that we can immediately use with our customers. It started with an explanation about the difference between declarative and imperative programming and how they can both be used within AWS CloudFormation.

The session continued with the new technologies that have been and are being developed within AWS CloudFormation. One of them is called ‘Macros’. Some of you who have experience with Azure ARM and AWS CloudFormation might know that Azure provides options to implement imperative programming, something that could not be used within CloudFormation easily. Until now!

What are CloudFormation macros?

Macros are short-hand, abbreviated instructions that expand your CloudFormation templates automatically when deployed. They are essentially small Lambda functions, written in any programming language that Lambda supports. Here’s a summary of what macros can help you with:

- Add utility functions such as iteration loops or string processing (e.g. upper-case, lower-case)

- Create global variables

- Ensure that resources are defined to comply with our standards

The macros are readily available through GitHub.

At Itility, one of the things we missed was being able to iterate over resources since the ‘old’ way at AWS was just simply copy-pasting the resource. Now we can use macros to do that for us.
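To give an idea of what such an iteration macro could look like, here is a minimal sketch of a macro Lambda in Python. The `fragment` and `requestId` keys and the response shape follow the CloudFormation macro contract as I understand it; the `Count` key on a resource and the numbered naming scheme are my own assumptions for illustration, not something shown in the session.

```python
import copy


def handler(event, context):
    """Expand every resource that carries a (hypothetical) Count key."""
    fragment = event["fragment"]
    expanded = {}
    for name, resource in fragment.get("Resources", {}).items():
        count = resource.pop("Count", None)
        if count is None:
            # No Count key: keep the resource exactly as it was written.
            expanded[name] = resource
            continue
        # Replace the single definition with numbered copies: Name1, Name2, ...
        for index in range(1, int(count) + 1):
            expanded[f"{name}{index}"] = copy.deepcopy(resource)
    fragment["Resources"] = expanded
    return {
        "requestId": event["requestId"],
        "status": "success",
        "fragment": fragment,
    }
```

A template would then declare a resource once with `Count: 3` instead of copy-pasting it three times.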

Another important subject for us is the tagging of resources. It helps us group resources together, but is also used for cost-reporting that we share with our customers. Instead of having to specify the exact same tags on each resource, you can now define a simple line of Python code within your template that does just that for you.
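As a rough sketch of the tagging idea, the macro below adds a default set of tags to every resource in the template. The tag keys and values are hypothetical placeholders. Note also that not every resource type accepts a `Tags` property, so a real macro would need to filter on the resource `Type`; that filter is left out here to keep the example short.

```python
# Hypothetical default tags; replace with whatever your cost reporting needs.
DEFAULT_TAGS = [
    {"Key": "CostCenter", "Value": "example-team"},
    {"Key": "ManagedBy", "Value": "cloudformation"},
]


def handler(event, context):
    """Add the default tags to each resource without overriding existing keys."""
    fragment = event["fragment"]
    for resource in fragment.get("Resources", {}).values():
        properties = resource.setdefault("Properties", {})
        tags = properties.setdefault("Tags", [])
        existing = {tag["Key"] for tag in tags}
        for tag in DEFAULT_TAGS:
            if tag["Key"] not in existing:
                tags.append(dict(tag))  # copy so resources do not share objects
    return {
        "requestId": event["requestId"],
        "status": "success",
        "fragment": fragment,
    }
```

Tags that are already set on a resource win over the defaults, so individual resources can still deviate where needed.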

Want to start using it for yourself and start testing?

AWS recommends setting up a Cloud9 in-browser IDE to start exploring the new functionalities that these macros provide. https://aws.amazon.com/cloud9/

How to use Imperative Programming?

Imperative programming is rather new with AWS CloudFormation, and to ease engineers into it AWS has created the ‘Cloud Development Kit’. Several libraries for generating CloudFormation templates from code are also available, such as:

- Troposphere (Python) - https://github.com/cloudtools/troposphere

- GoFormation (Golang) - https://github.com/awslabs/goformation
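The idea behind generating templates from code can be sketched with nothing but the Python standard library. The example below is not Troposphere or the Cloud Development Kit; it only shows the principle of building a template with a loop and then serializing it. The function name and the bucket names are made up for illustration.

```python
import json


def build_template(bucket_names):
    """Build a CloudFormation template with one S3 bucket per name."""
    resources = {}
    for name in bucket_names:
        # Logical IDs must be alphanumeric, so drop the dashes.
        logical_id = name.title().replace("-", "") + "Bucket"
        resources[logical_id] = {
            "Type": "AWS::S3::Bucket",
            "Properties": {"BucketName": name},
        }
    return {"AWSTemplateFormatVersion": "2010-09-09", "Resources": resources}


if __name__ == "__main__":
    template = build_template(["logs-example", "data-example"])
    print(json.dumps(template, indent=2))
```

Libraries such as the ones listed above add typed resource classes and validation on top of this basic pattern.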

Again, feel free to reach out to me for more information about this topic.

Just do it: Nike's Journey to Real-Time Monitoring of its Digital Business

Onto the next session, during which two of Nike’s engineers explained how they started their journey of making their applications production-ready and everything that comes with it.

It was nice to see Nike used SRE’s Four Golden Signals of monitoring distributed systems. This will be a topic that is going to be handled during one of our own SRE trainings. Learn more about the topic here. Nike uses the ‘crawl-walk-run’ method – as they called it.

- Crawl – start thinking about what is important to monitor and what you want to get paged for. Knowing is always better than not knowing.

- Walk – what impacts our customers?

- Run – look at what you actually value, and measure those things instead

At Nike, their run measurement is: shoes per second. Meaning: how many shoes can Nike sell per second during peak load when they are releasing a new shoe (for example). Their peak? A staggering 140 shoes per second!
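A business-level metric like this can be derived from plain order events. The sketch below is my own illustration, not Nike's implementation: it assumes each sale is recorded as a Unix timestamp in seconds and reports the busiest single second.

```python
from collections import Counter


def peak_per_second(timestamps):
    """Return the highest number of events that fall within one clock second."""
    if not timestamps:
        return 0
    # Bucket every event by its whole second, then take the fullest bucket.
    per_second = Counter(int(ts) for ts in timestamps)
    return max(per_second.values())
```

Feeding it the sale timestamps of a launch window gives the peak ‘items per second’ figure for that launch.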

Serverless Stream Processing Pipeline Best Practices

In the final session we attended today, Intel explained how they are developing a new solution to monitor people with neural disabilities. Very interesting to learn about the application itself and how it functions instead of the way they implement it.

This session was a summary of what tools Intel uses to stream all their data into AWS using AWS’ predefined services such as Kinesis Data Streams, Lambda functions, and S3 buckets. I’d hoped this session would go a bit more in-depth, but it didn’t. Nevertheless, it was a good ending to our day.
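To make the Kinesis-to-Lambda step a bit more concrete, here is a minimal sketch of a Lambda handler that decodes a batch of Kinesis records. The event layout (`Records`, `kinesis`, base64-encoded `data`) is the standard one for Kinesis-triggered Lambdas; the assumption that each payload is a JSON document is mine, and this is not Intel's code.

```python
import base64
import json


def handler(event, context):
    """Decode a batch of Kinesis records delivered to Lambda."""
    readings = []
    for record in event["Records"]:
        # Kinesis delivers the payload base64-encoded.
        payload = base64.b64decode(record["kinesis"]["data"])
        readings.append(json.loads(payload))
    # A real pipeline would now write the batch to S3 (for example with boto3);
    # that step is left out to keep the sketch self-contained.
    return {"processed": len(readings), "readings": readings}
```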

The Expo

After our sessions it was time for the opening of the AWS expo. This is a giant hall in which lots of companies around the world explain how they use AWS and how it can benefit us as well. It was a nice opportunity to grab the first pieces of ‘swag’ from the multiple stands.

VictorOps had a stand and we went there to talk about any new upcoming features. Since VictorOps was recently bought by Splunk, the current focus was the many Splunk integrations. I was also able to discuss some new features that I’d like to see.

Maybe the coolest thing that happened today was meeting our AWS hero. He is the person who taught us everything we needed to know about getting AWS certified: Ryan Kroonenburg!

This marked the end of our day. Talk to you tomorrow, when we will have more news on AWS!

Day 2

After an early alarm and a much needed coffee (still jet-lagged), we’re ready for re:Invent day #2. Today I had a couple of topics on the agenda, including:

1. Operational Excellence with Containerized Workloads Using AWS Fargate

2. Drive Self-Service & Standardization in the first 100 Days of your Cloud Migration Journey

3. From One to Many: Evolving VPC Design

Operational Excellence with Containerized Workloads Using AWS Fargate

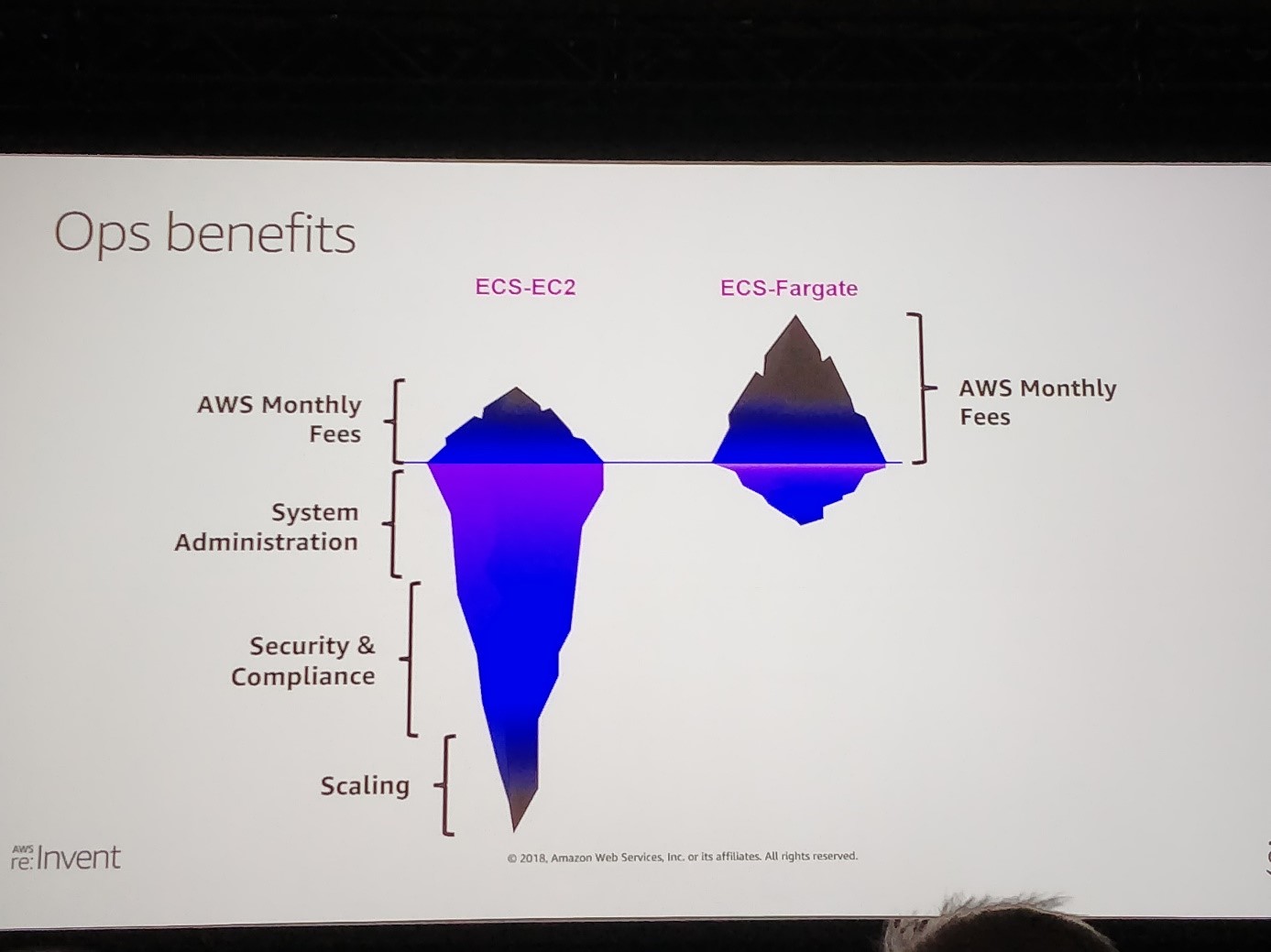

The first session of the day was about AWS Fargate and how to leverage Fargate and its serverless approach to deploy and manage containerized applications.

Fargate is a compute service of AWS that allows users to run containers without having to manage the servers or the cluster underneath it. AWS Fargate can spin up containers in seconds and scale almost endlessly by adding more containers.

This session was hosted by a company called Datree that creates testing software for your infrastructure. Basically, you create rules and guidelines for your cloud environment, and when you want to deploy a new application, your infra-as-code will be tested against those rules. If your application is designed within the predefined rules, the deployment can continue.
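The principle of testing infra-as-code against rules can be sketched in a few lines of Python. This is not how Datree's product works internally; the rule format and the `check_template` function are hypothetical, and the single rule shown (S3 buckets must define `BucketEncryption`) is just an example.

```python
def check_template(template, rules):
    """Return violation messages; an empty list means the deployment may continue."""
    violations = []
    for name, resource in template.get("Resources", {}).items():
        properties = resource.get("Properties", {})
        for rule in rules:
            applies = rule["type"] == resource.get("Type")
            if applies and not rule["check"](properties):
                violations.append(f"{name}: {rule['message']}")
    return violations


# Hypothetical rule set with one example rule.
RULES = [
    {
        "type": "AWS::S3::Bucket",
        "message": "bucket must define encryption",
        "check": lambda props: "BucketEncryption" in props,
    },
]
```

A pipeline would call `check_template` before deploying and stop as soon as the returned list is not empty.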

Because Datree delivers their product as a SaaS solution, they needed a fast, reliable, and elastic compute environment to run the rules and guidelines against your application deployment on demand. They chose AWS Fargate to run their service and create new containers for every request that comes in.

AWS Fargate is already being used within ICC and at our customers, so although it was really interesting to see how other companies consume the cloud and create fast-scaling applications using AWS Fargate, no new features were shared.

AWS Fargate may seem more expensive than a simple EC2 server. However, you do not have to manage the underlying server, security, and scaling.

Drive Self-Service & Standardization in the first 100 Days of your Cloud Migration Journey

During this session, GoDaddy – a domain hosting company with over 77 million domain registrations and over 330k DNS queries a second – shared their journey of migrating their application to the public cloud. Because GoDaddy has a lot of separate teams (130+) and every team wants to manage their own application infrastructure, GoDaddy had to find a way to standardize the creation of the AWS accounts for every single team. AWS Service Catalog is the solution!

AWS Service Catalog uses portfolios to create rules and defaults for every (new) AWS account and requires users to build and deploy by these rules. Because GoDaddy has a lot of separate accounts, AWS Service Catalog helped them to deploy the exact same configuration for every account: landing zone, cost governance, security settings, billing reports, and logging endpoints.

Migration intake processes can take a lot of effort, therefore GoDaddy decided to create a self-service portal for their internal teams. This enabled the teams to quickly create their new application environments in AWS. But instead of having all the freedom of the public cloud, AWS Service Catalog kept them from coloring outside of the (policy) lines.

From One to Many: Evolving VPC Design

The most interesting session of the day was about VPCs. VPCs are Virtual Private Clouds (virtual networks) in AWS. Most companies have tens to thousands of VPCs running in their AWS accounts and connect them together using VPC peering. Connecting a lot of VPCs together using VPC peering quickly becomes complicated and brings challenges when you have VPCs in multiple AWS accounts and connections to your on-premises datacenters. This week AWS announced Transit Gateway to make this easier!

AWS Transit Gateway is a service that acts as a central point between your VPCs, on-premises datacenter, remote offices and gateways. AWS Transit Gateway makes it easier to manage routing and security because it’s (mostly) centralized in one place.
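A bit of arithmetic shows why a central hub helps. VPC peering is not transitive, so letting every VPC talk to every other VPC requires a full mesh of peering connections, while a hub needs only one attachment per VPC. The function names below are just for illustration.

```python
def peering_connections(vpc_count):
    """Peering connections needed for a full mesh between all VPCs."""
    return vpc_count * (vpc_count - 1) // 2


def hub_attachments(vpc_count):
    """Attachments needed when every VPC connects to one central hub."""
    return vpc_count


if __name__ == "__main__":
    for count in (10, 100):
        print(count, peering_connections(count), hub_attachments(count))
```

With 10 VPCs a full mesh already needs 45 peering connections, and with 100 VPCs it needs 4950, against 10 and 100 attachments to a hub.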

I’m excited to get hands-on experience with this new feature as it will help us and our customers to improve speed, maintainability and security for traffic between VPCs and on-prem.

Benelux drinks

After our sessions it was time for the Benelux drinks, a party hosted by the AWS partners in the Benelux. Time for some drinks and connecting with customers and old colleagues. Since there was a pool table present, we had to show off our skills and challenge some competitors in a game of pool. (we won 😉)

Day 3

The day of the first keynote required an extra early start since it started at 8:00AM at the Venetian – a 45 min walk. In a room that could fit quite some people, we were lucky enough to sit almost at the front of the stage!

The keynote was held by Andy Jassy, CEO of Amazon Web Services, and contained many new releases on the AWS platform. In this blog we’ve picked some that we think could benefit Itility and our customers on the ICC platform immediately.

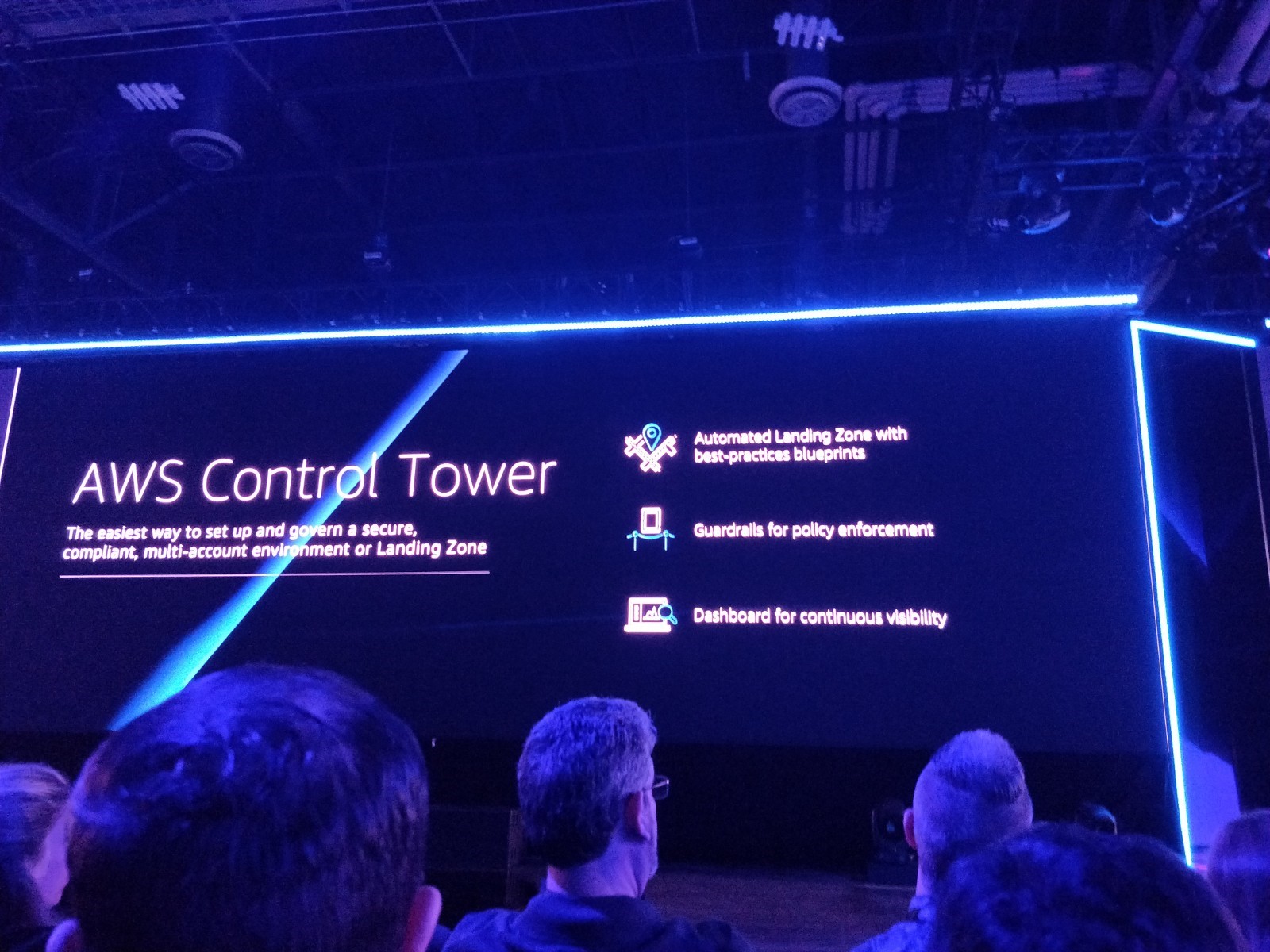

AWS Control Tower

Before AWS Control Tower it was quite a hassle to manage multiple accounts within one organization. Accounts within the organization could differ quite a bit and there was no central location to manage and control these accounts to make them compliant with the organization’s controls. AWS Control Tower fixes just that.

AWS Control Tower is basically an automated landing zone with best practices blueprints. It enables a company to set up and govern a secure, compliant, multi-account environment or landing zone. Key features that were named are:

- Configuration of a multi-account structure using AWS Organizations

- Managing identities with AWS Single Sign-On or Microsoft Active Directory

- Federate access with AWS Single Sign-On

- Centralized logging using AWS CloudTrail or AWS Config

- Cross-account access using IAM

- Implement a best practices network design using VPC

- Configuration of an account factory through AWS Service Catalog

This means that when new accounts are created, they will automatically be compliant with the company’s standards and best practices.

Find out more about AWS Control Tower.

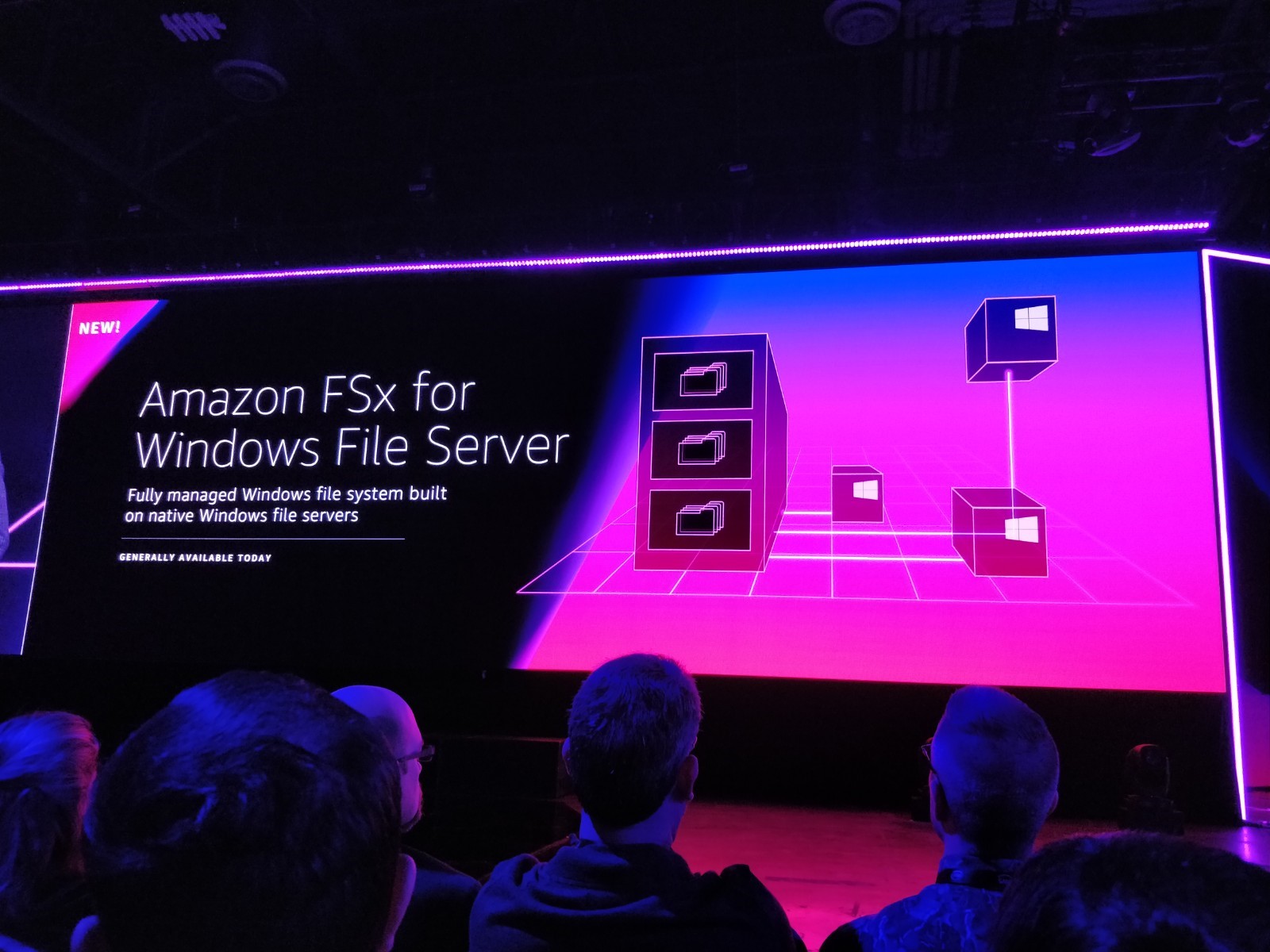

Amazon FSx for Windows File Server

Another announcement that we can benefit from immediately is Amazon FSx. Until now it was not possible to use Windows file servers easily on AWS. Amazon FSx makes this possible. Amazon FSx is a fully managed Windows file system built on native Windows file servers.

Key features that were named are:

- Compatibility with AD, Windows Access Control, and native Windows Explorer experience

- No hardware or software to manage

- Up to 10s of GB/s throughput with sub-millisecond latencies

- Secure and compliant including PCI-DSS, ISO and HIPAA

Find out more about Amazon FSx.

AWS Security Hub

While Itility and ICC are focusing more and more on security and how to measure and monitor it, AWS released a new service called AWS Security Hub. This allows companies to centrally manage security and compliance across an AWS environment. This service is the direct counterpart of the existing Azure Security Center that is already being used by ICC.

Key features that were named are:

- Save time by aggregating alerts generated based on monitoring you’ve set up regarding security and compliance

- View a summary of prioritized issues

- Automate compliance checks to detect deviations against industry standards.

While it is currently in preview, it is completely free of charge. One has to enable AWS Config though, another service from AWS. It would be a good idea to use this tool to do a first ‘scan’ of our AWS environments, not only our own but also that of our customers to help them become more secure and compliant.

Find out more about AWS Security Hub.

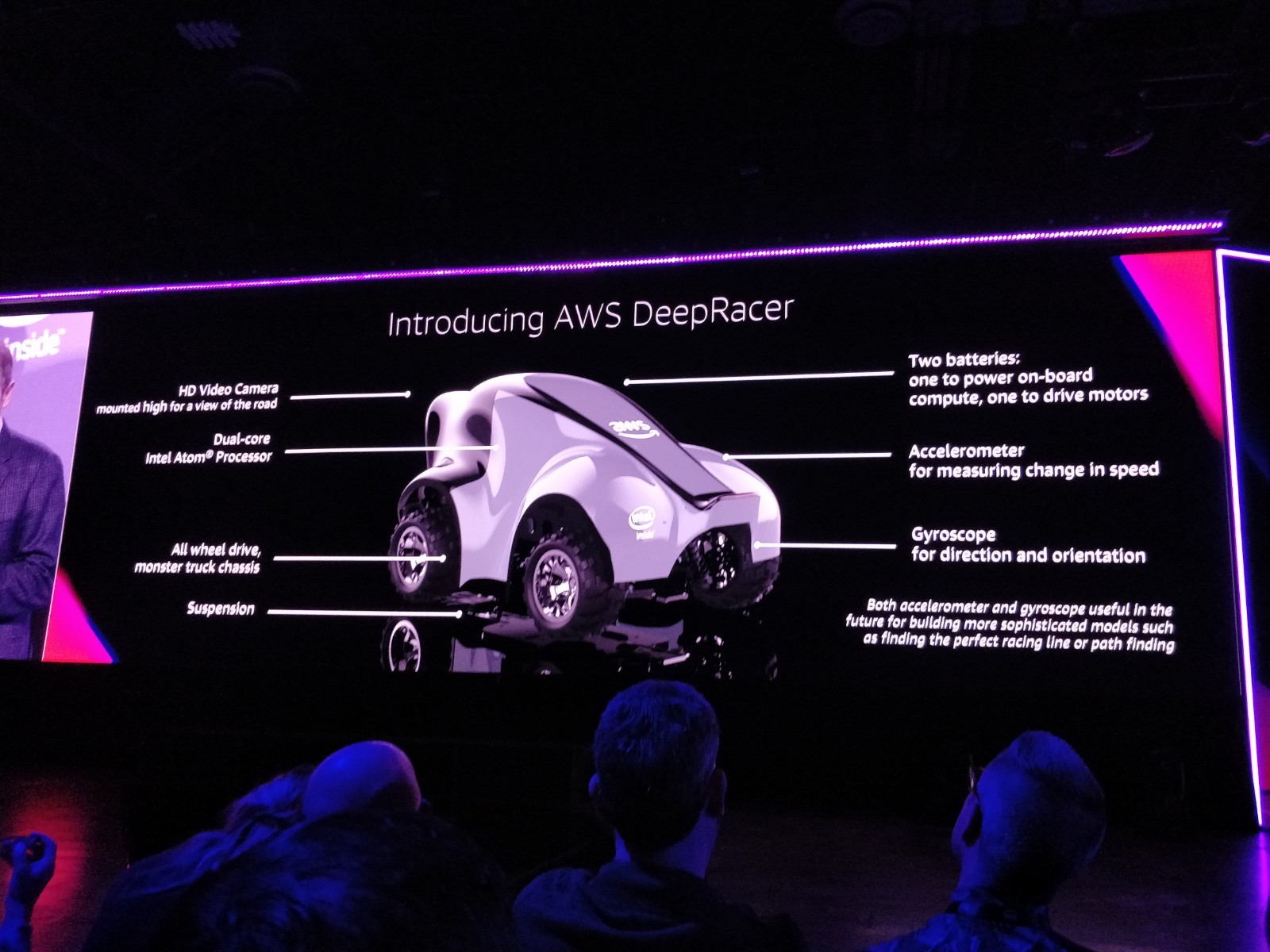

Amazon DeepRacer

A totally unexpected but pleasant announcement was the Amazon DeepRacer: a global, autonomous racing league using race cars no bigger than a shoebox that can be trained using reinforcement learning.

AWS provides a virtual simulation in which the race car can be trained. The league comprises 22 races across the globe at AWS Summits, where anyone owning a DeepRacer can participate and see how well their virtual simulation training has worked.
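Training in the simulator revolves around a reward function that scores every step the car takes. The sketch below rewards staying close to the center line. The function signature and the parameter names (`all_wheels_on_track`, `track_width`, `distance_from_center`) are what I understand the DeepRacer console to use, so treat them as assumptions; the reward values themselves are arbitrary.

```python
def reward_function(params):
    """Reward the car for staying close to the center line."""
    if not params["all_wheels_on_track"]:
        return 1e-3  # almost no reward when the car leaves the track

    track_width = params["track_width"]
    distance = params["distance_from_center"]

    # Three bands around the center line, each with a lower reward.
    if distance <= 0.1 * track_width:
        return 1.0
    if distance <= 0.25 * track_width:
        return 0.5
    if distance <= 0.5 * track_width:
        return 0.1
    return 1e-3
```

Reinforcement learning then adjusts the driving model so that, over many simulated laps, the total reward goes up.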

The winners of each DRL race and overall top-scorers will compete for the championship cup at re:Invent 2019! Virtual tournaments will be held throughout the year.

Kjeld sent an image to Marianne who was immediately excited about this little race car and asked him to put it in his luggage! Are we going to compete in these races? Hopefully we will soon find out, I am already excited! Let the naming contest begin!

Find out more about Amazon DeepRacer.

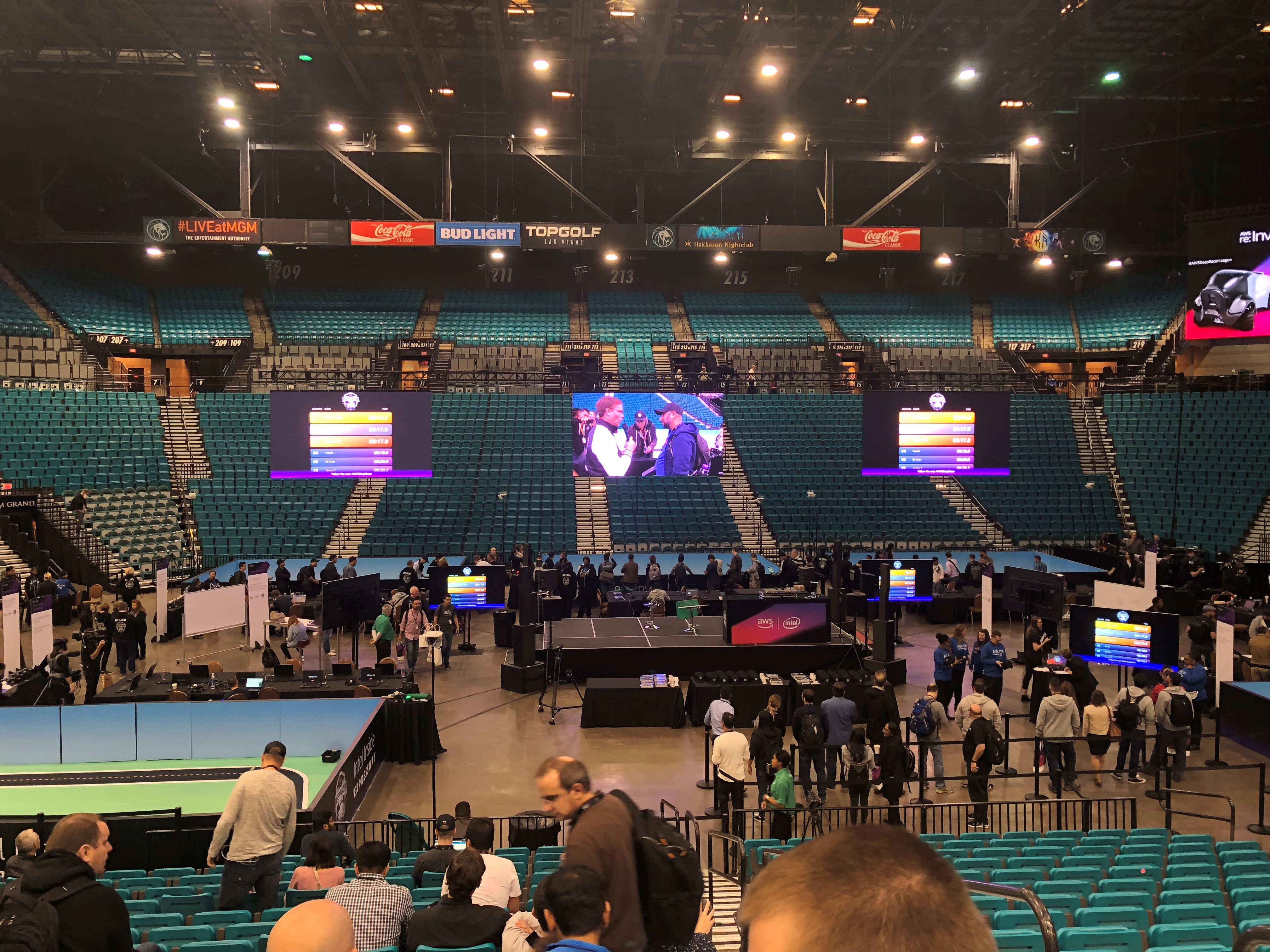

During the announcement Andy said that a 2018 competition would start at the MGM Grand Arena, where engineers could already start working with the DeepRacer. It was fun to see the cars in action and to see some people already being able to steer their autonomous race car across the track without going out of bounds. The top 3 will compete tomorrow before the keynote of AWS CTO Werner Vogels.

Some other releases that are worth mentioning:

- AWS Outposts – Making it possible to have fully managed AWS hardware on-premises, tailored to your specific needs. Useful for applications in which low latency is paramount

- AWS Lake Formation – Making it possible to build a secure data lake in days

- Amazon Timestream – A fast, scalable, fully managed time series database.

Read a full overview of all new announcements during AWS re:Invent.

In the afternoon we walked along the Expo again in search of AWS experts to talk about migrating existing systems to AWS. We’ve been working on a migration plan and we’d like to talk to some experts to validate our current strategy.

We’ve also visited the AWS Certification Lounge where we collected some extra swag to bring home. Hopefully it all fits in our suitcases.

Building PaaS with Amazon EKS for the Large-Scale, Highly Regulated Enterprise

In the afternoon, I went to a session about how Fidelity – a company with over 800 DevOps teams – makes it possible for developers to have their own Kubernetes cluster including authentication and authorization using EKS (Elastic Kubernetes Service) and the IAM authenticator.

The talk touched the basics of EKS and went more in depth on how Fidelity is able to build containerized applications in a highly regulated industry like the finance sector.

It was nice to see that Fidelity used the exact same approach regarding the platform structure of Kubernetes and usage of tools like we do currently at CDL.

Want to know more about the sessions that we go to or interested in other topics? Take a look at the AWS YouTube channel. It already contains quite a few sessions, and more will be uploaded every day.

This concluded our third day already; time is flying by. Be sure to follow us while we go into the final full day of AWS re:Invent 2018, starting with a keynote from CTO Werner Vogels in the morning. Hopefully he’ll come with some nice new releases!

Day 4

AWS re:Invent day 4 already, and again an early start, since the second keynote with Werner Vogels (Amazon CTO) started at 8:30AM in the Venetian. The keynote didn’t contain as many new releases as the one from yesterday, but still a lot of fascinating topics. Because today’s keynote was again a long one, we’ve picked a couple of topics that are interesting and/or could benefit our customers on the Itility Cloud platform (ICC).

After the keynote we had a couple of technical sessions on Elastic File System and self-healing Lambda functions, and we ended the day with the big re:Play party (you’re never too old to play).

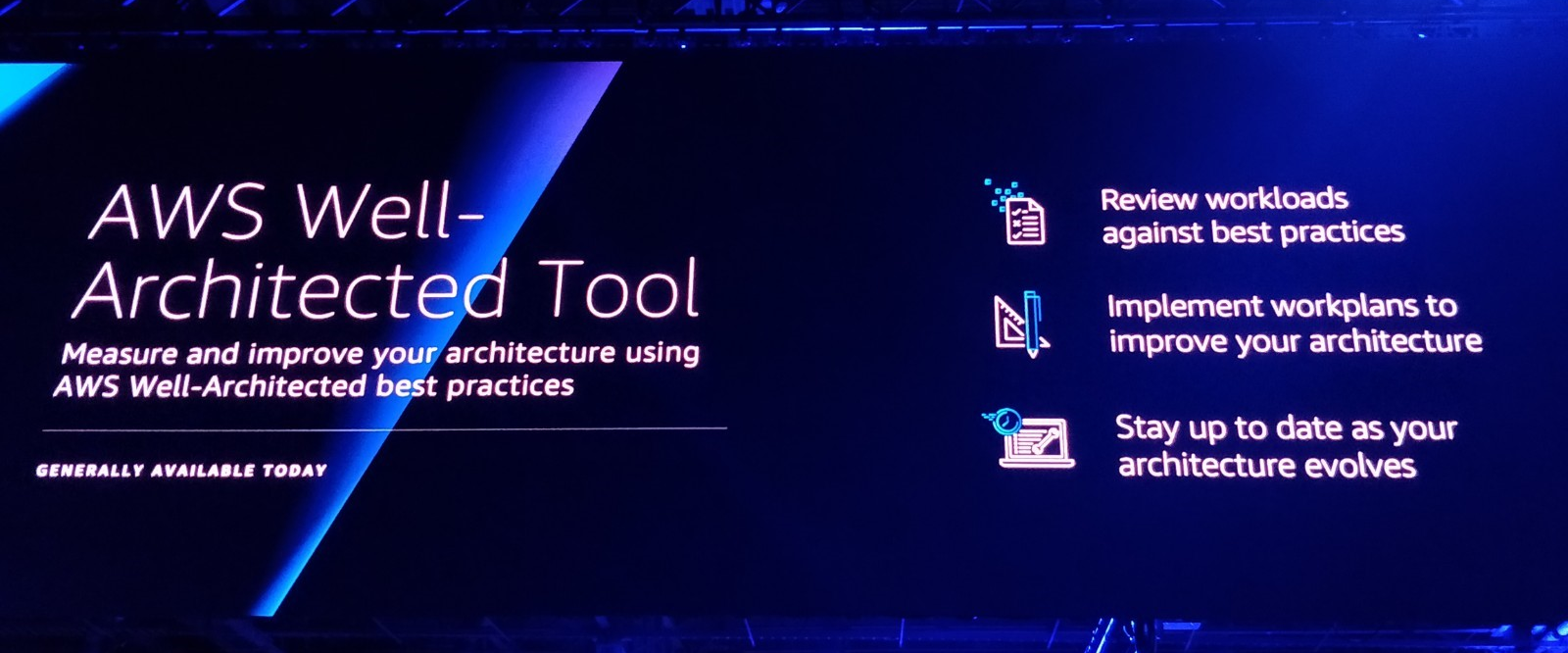

AWS Well-Architected Tool

Cool new announcement today: the AWS Well-Architected Tool. We have been familiar with the AWS Well-Architected Framework for quite a while now. We did Well-Architected reviews and applied the best practices of AWS to our own ICC and customer environments. However, it can take quite some valuable time to go through the Well-Architected Framework (80+ pages) to test your workloads against the AWS best practices. Available as of today, the AWS Well-Architected Tool will save us all a lot of time in keeping our own and our customers’ environments up to date with the Well-Architected best practices, built around the five pillars: Security, Reliability, Performance Efficiency, Operational Excellence, and Cost Optimization. We are really excited to start using this tool to make our lives easier and improve our managed environments even more.

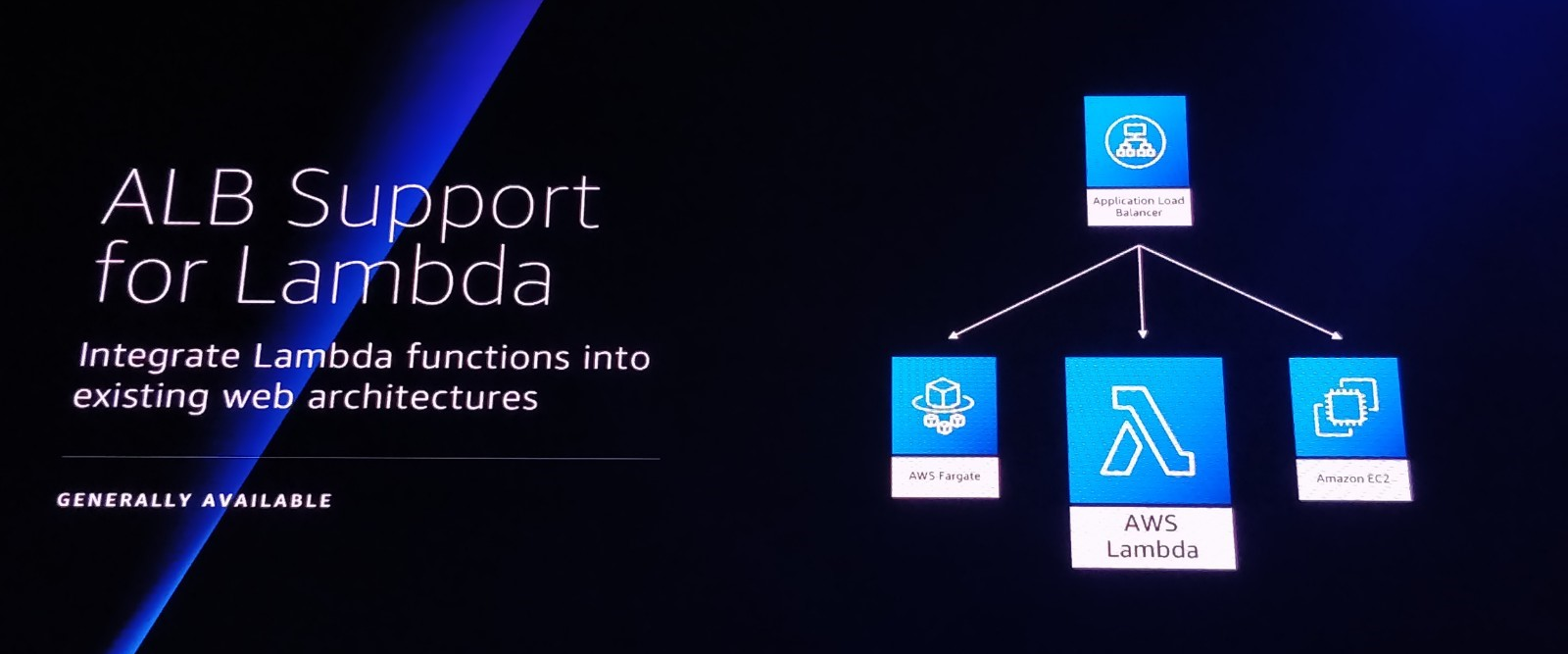

Application Load Balancer (ALB) Support for Lambda

Another fresh announcement: Application Load Balancer support for Lambda functions. While I do not see a direct use case within our services, it brings a lot of cool new possibilities. Think about running your web applications serverless using Lambda, with an ALB as a frontend with an HTTPS endpoint. It’s possible to route to a different Lambda function based on the URL path or the host. Let’s take some time to play around with it and see if it could bring us any value for our web or API services.
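For those who want to play around with it, a Lambda function behind an ALB could look roughly like the sketch below. The event field `path` and the response fields (`statusCode`, `statusDescription`, `isBase64Encoded`, `headers`, `body`) follow the ALB-to-Lambda format as I understand it; the `/health` route is a made-up example.

```python
import json


def handler(event, context):
    """Answer an HTTP request forwarded by an Application Load Balancer."""
    path = event.get("path", "/")
    if path == "/health":
        status, description, body = 200, "200 OK", {"status": "ok"}
    else:
        status, description = 404, "404 Not Found"
        body = {"error": f"no route for {path}"}
    return {
        "statusCode": status,
        "statusDescription": description,
        "isBase64Encoded": False,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }
```

In practice you would let the ALB do the path- or host-based routing and keep each function focused on a single route.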

Shift-Left SRE: Self-Healing with AWS Lambda Functions

In this session the speaker, Andreas Grabner, spoke about a couple of ways to improve the quality and decrease the error rate when deploying new software to a production or acceptance environment. From an SRE point of view, Andreas showed how he only monitors the metrics that are relevant to his application and that have immediate impact on customers. Instead of a normal pipeline (test > deploy > merge code), Andreas created a much more functional and reliable way of deploying new code:

1. Unit tests.

2. Deploy application code to acceptance.

3. Monitor the relevant metrics for +/- 10 minutes to make sure the new application code did not break anything.

4a. If everything is functional as desired: Merge code into acceptance/master branch. And trigger this same pipeline for production.

4b. If any metric is outside of the set boundaries, rollback deployment and create Jira ticket for the developer.
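The decision in steps 3 and 4 can be sketched as a small function. This is my own illustration, not code from the session: the metric names, the boundary format, and the choice to roll back when a metric has no samples at all are assumptions.

```python
def evaluate_deployment(samples, boundaries):
    """Return 'promote' if every metric stayed within bounds, else 'rollback'."""
    for metric, (low, high) in boundaries.items():
        values = samples.get(metric)
        if not values:
            # No data for a metric we care about: do not take the risk.
            return "rollback"
        if any(not low <= value <= high for value in values):
            return "rollback"
    return "promote"
```

The pipeline would collect the samples during the monitoring window and then either merge the code or trigger the rollback and ticket creation based on the returned value.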

I believe we can create a lot of value if we also start thinking like Andreas: measure only the metrics that matter, set boundaries for those metrics, and roll back code automatically when metric thresholds are exceeded. In our case, a quick win would be to add an extra step to our Puppet config pipeline. This step should monitor the Puppet agents and, if Puppet runs start failing because of the latest change, revert it automatically and inform the developer(s) by creating a Jira ticket or bug.

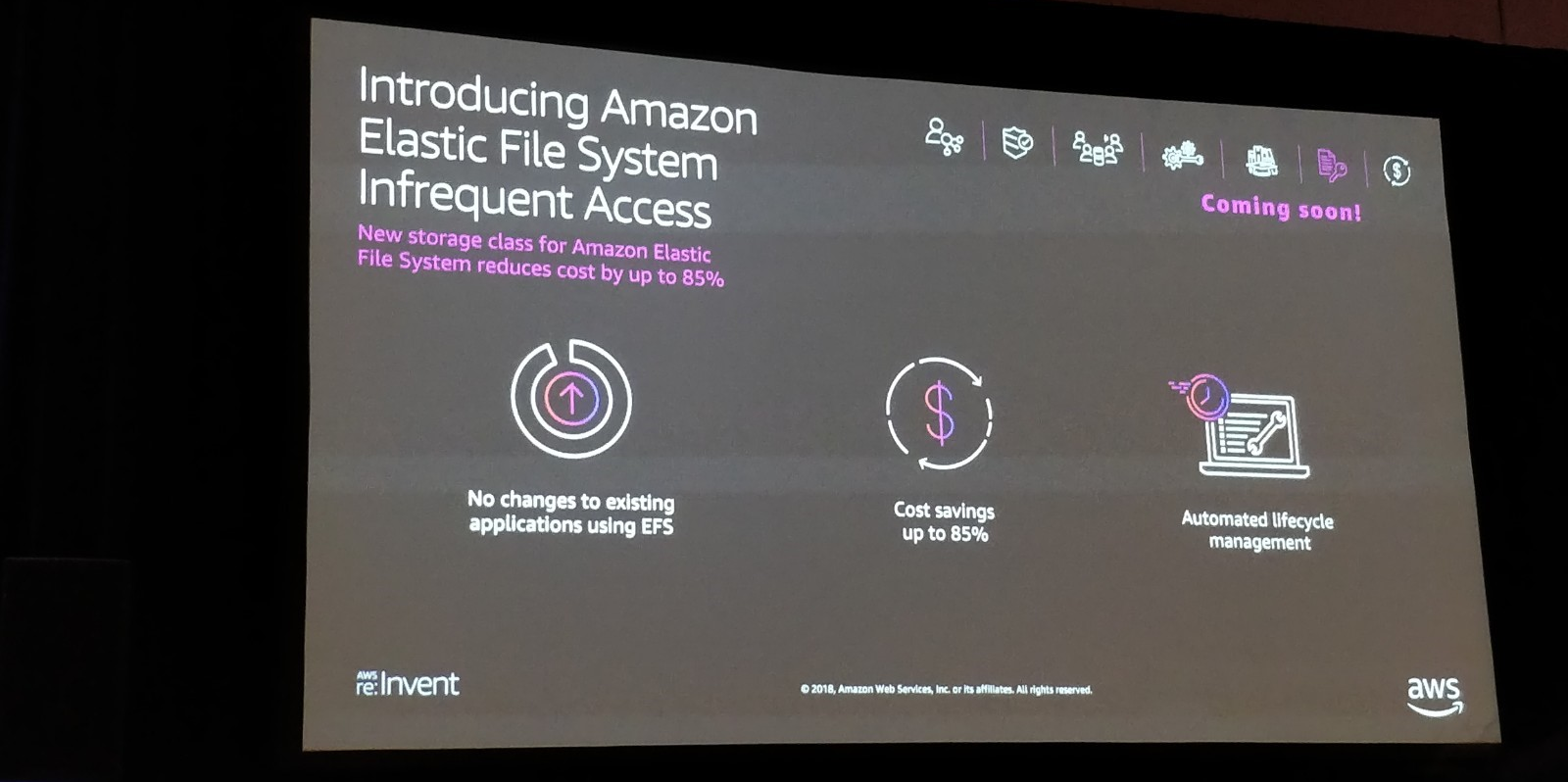

Elastic File System (EFS): Infrequent Access

AWS Elastic File System (EFS) is a simple, scalable, and elastic file system for Linux-based systems (Amazon FSx was announced yesterday for Windows servers). While EFS has been around for a while now, Infrequent Access has been announced as a new feature. It is a really useful addition to EFS because it can save you a lot in costs if you have a lot of data stored in EFS that is rarely used. This new feature sounds extra interesting because we passed over EFS in the past because of its costs.
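To get a feeling for the possible savings, the storage part of the bill can be estimated with a simple formula. The prices are passed in as parameters on purpose: look up the current prices for your region, because the numbers in the usage note are placeholders and not actual AWS prices. The sketch also ignores any data-access charges that may apply to infrequently accessed data.

```python
def monthly_storage_cost(total_gb, infrequent_share, standard_price, ia_price):
    """Estimate monthly storage cost with part of the data in Infrequent Access.

    infrequent_share is the fraction (0.0 to 1.0) of data that is rarely used;
    both prices are per GB per month.
    """
    ia_gb = total_gb * infrequent_share
    standard_gb = total_gb - ia_gb
    return standard_gb * standard_price + ia_gb * ia_price
```

For example, with placeholder prices of 0.30 and 0.05 per GB per month, 1000 GB of which 80% is rarely used would cost about 100 per month instead of 300 with everything in standard storage.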

Re:Play

We ended our day at the AWS re:Play party. Werner Vogels didn’t lie when he told us “You’re never too old to play”. Games of dodgeball, archery tag, an office chair race, and laser gaming entertained us until the late hours.

Day 5

After a good night’s sleep following the AWS re:Play party we headed out to our final sessions of the conference in the Mirage. It has been quite a week full of new experiences, knowledge gathering, socializing, and overall enjoying the conference. The organization was a well-oiled machine.

My final session was a chalk talk about the newly announced Amazon FSx, a fully managed Windows-native file system. While AWS is working hard on extra features, it seems we cannot use this service at our customers right now because some features are still missing. Some examples:

- It is not possible to connect the file system to your on-premises servers via Direct Connect, meaning that only servers running inside the AWS environment are able to use the file system.

- It is not highly available from the get-go; you have to set this up yourself using guides AWS provides.

- Migrating to the FSx file system is a tedious job where you have to ZIP your current file system and upload it to S3. Next, you download it on a Windows server in your VPC that is connected to the new file system and unzip the files.

Hopefully AWS releases a roadmap that shows the way forward regarding Windows file systems on AWS, because we could really use the service at our customers.

After our final sessions we walked back to our hotel where we packed our bags, and each went our separate ways in the afternoon, relaxing a bit and/or going to the casino. Las Vegas is not all bad for your health: we walked quite a bit!

For our final night we booked tickets to go see Fleetwood Mac in the T-Mobile arena next to our hotel. This concluded our AWS re:Invent conference and our trip to Las Vegas. Check out the AWS website for a global overview of the re:Invent Recap edition 2018.

I hope you all liked reading this blog and if there are any questions, come find us at HQ or send an email (info@itility.nl).

Make sure to sign up below and receive more updates about the events we visit and inspiring stories in your inbox.