Our experiences at VMworld US 2018 - detailed post

We are travelling to Las Vegas to dive into the latest trends, developments, state-of-the-art technologies, and to learn from experts. And we won't keep it all to ourselves — keep an eye on this blog where we will share our experiences about the VMworld US 2018.

VMworld?

The number-one event for enabling the digital enterprise. It's the destination for cloud infrastructure and digital workspace technology professionals. It brings you five days of innovation to accelerate your journey to a software-defined business — from mobile devices to the data center and the cloud.

Which sessions would you like us to join? Let us know by leaving a comment below and we'll share our experiences.

Day 5.

The last day of VMworld 2018!

I have to admit that I am quite exhausted by now. I have learned a lot in these 5 days. I picked up information about handy tools, VMware Cloud Foundation, VMware Validated Designs, considerations when migrating to the cloud, VMware Cloud on AWS, performance best practices for the vCenter Server Appliance, and much more.

As indicated before, according to VMware’s research more and more customers will be choosing the hybrid cloud model (a combination of on-premises cloud and public cloud). This made me curious about how to deal with this, so today I focused on the business considerations when migrating to the cloud.

So what do customers actually want? When migrating to the cloud, customers want a simplified journey. Unfortunately, this journey comes with a lot of migration dilemmas, such as:

- What goes and what stays? (on prem vs in the cloud)

- Which cloud will it go to?

- What applications need to be transformed vs simply moved?

- What methods will we use to migrate or to transform?

- What tools will we use?

- What must be migrated together (dependencies)?

- What parts must be migrated vs what must be rebuilt?

- What remediation is required?

- How fast can we get there?

- How much does it cost?

- Will there be any downtime?

- How do we minimize the impact on the business?

Besides these dilemmas, customers have to weigh a number of considerations when migrating to the cloud. They fall into three main categories:

- Security & Compliance

  - What impact does security have on my applications in the cloud?

  - Do I need to reconfigure my application in the cloud?

  - Do I need a new set of policies and firewalls?

  - Do I meet the compliance regulations / GDPR?

- Scalability & Performance

  - What are my performance requirements within the cloud?

  - What are my bandwidth requirements for applications?

  - How should I design my cloud environment for best performance of my applications?

  - Are there any management considerations?

- Agility vs Cost Tradeoff

  - What are the operational costs when migrating to the cloud?

  - What is the business risk and impact?

  - What are the risks?

  - What are the advantages?

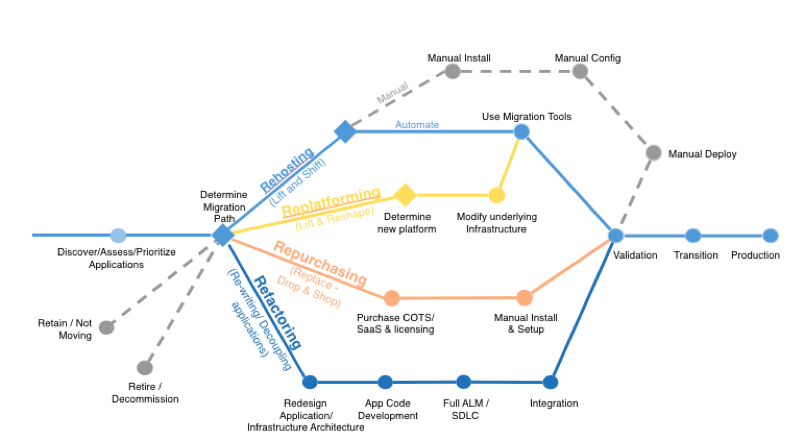

6 R’s Migration Strategies

Different migration strategies can be used to migrate to the cloud:

- Rehosting

This is also known as the lift and shift method. You just migrate the workload to the cloud without performing any additional modifications on it.

- Replatforming

With this strategy you perform some minimal modifications on the application before moving the workload to the cloud, in order to achieve some benefits.

- Repurchasing

Here you decide whether it’s necessary to switch to a different product. For example, you can choose a SaaS (Software as a Service) solution.

- Refactoring / Re-Architecting

Using this strategy, you go in-depth into how the application is architected and developed. This really is a step towards application modernization (a step to optimize the workload).

- Retire

Here you select the applications which do not need to be migrated because, for example, the application is no longer used by anyone within the organization.

- Retain

The retain strategy is when the applications to be migrated are put “on hold”, for example when a decommissioning is expected.
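To make the six strategies a bit more tangible for myself, I sketched them as a small decision helper. This is purely illustrative: the `App` fields and the order of the checks are my own assumptions and were not presented by VMware in this form.

```python
from dataclasses import dataclass
from enum import Enum


class Strategy(Enum):
    REHOST = "rehost"
    REPLATFORM = "replatform"
    REPURCHASE = "repurchase"
    REFACTOR = "refactor"
    RETIRE = "retire"
    RETAIN = "retain"


@dataclass
class App:
    # All fields are hypothetical inputs for this illustration.
    name: str
    still_used: bool = True
    decommission_planned: bool = False
    saas_alternative: bool = False
    needs_modernization: bool = False
    needs_minor_changes: bool = False


def pick_strategy(app: App) -> Strategy:
    """Return one of the 6 R's; the order of the checks is an assumption."""
    if not app.still_used:
        return Strategy.RETIRE          # nobody uses it anymore
    if app.decommission_planned:
        return Strategy.RETAIN          # put on hold until the decom
    if app.saas_alternative:
        return Strategy.REPURCHASE      # switch to a different product
    if app.needs_modernization:
        return Strategy.REFACTOR        # re-architect the application
    if app.needs_minor_changes:
        return Strategy.REPLATFORM      # minimal modifications first
    return Strategy.REHOST              # plain lift and shift


legacy = App("old-intranet", still_used=False)
print(legacy.name, "->", pick_strategy(legacy).value)
```

In practice the answers will rarely be this binary, but writing it down like this shows that rehosting is what remains when none of the other questions apply.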

What VMware offers

VMware offers a solution called HCX (Hybrid Cloud Extension) which allows you to seamlessly migrate workloads to the cloud. This tool gives you a simple journey to the cloud with zero downtime and no need to re-IP applications. HCX is available when customers purchase VMware Cloud on AWS. VMware Cloud on AWS is a full VMware SDDC (Software Defined Data Center) running on AWS, with the same vCenter management and the same interfaces. There is nothing new to learn.

As can be seen from the above, the journey to the cloud brings some challenges along the way. You need to think carefully about the many aspects and steps you take before migration to the cloud can be accomplished.

One should also think about incompatible or non-interoperable stacks. For example, a customer’s on-premises environment is running vSphere 5.5 while the cloud they want to migrate to is running a higher version. So how do you deal with this? Secondly, there are network and security constraints: you actually want to retain your IP addresses. Thirdly, how do you deal with applications with dependencies? You have to take care of the entire workload.

My key learning point during these sessions was that customers would like a simplified journey to the cloud, but there are a lot of considerations, challenges and decisions that need to be thought through on a long-term basis. In my opinion, this should really be planned meticulously. It’s probably possible to use tools to migrate to the cloud, but then you just migrate a workload “as is”: the workload is simply rehosted in the cloud. The next step should be preparing the application for use in the cloud by optimizing or even re-architecting it.

Resources to get you started:

https://cloud.vmware.com/community

https://cloud.vmware.com/cost-insight

https://vmcsizer.vmware.com

I hope you enjoyed reading my blogs. If you have questions, please leave a comment or a message.

We will update this page shortly with the official summary of the evening.

For those who want to experience VMworld themselves: next year VMworld 2019 takes place in San Francisco.

Day 4.

Today I joined some interesting sessions related to the vCenter Server Appliance (availability and best performance), scalability of an automated UCS infrastructure, free tools to troubleshoot a vSphere environment, VMware Validated Designs, and ESXTOP as a utility.

Journey to vCenter Server Availability and Recoverability

In the past VMware offered VMware Heartbeat. This product was able to protect the vCenter Server against outages and failures. The EOA (End of Availability) of this product was in June 2014. After that VMware did not really offer a new product to protect the vCenter Server. So what happens if vCenter is not available? This actually depends on the products which are integrated with vCenter Server. One thing is sure: if the VC is unavailable, the workloads (ESXi hosts and VMs) keep running, so there is no impact on the workloads themselves. There is, however, an impact on products like vRealize which are linked with the vCenter Server. While the VC is unavailable, new port groups, for example, can’t be created until the VC is operational again, because vRealize uses the VC to perform changes.

Since vSphere 6.5 VMware has integrated a vCenter HA (High Availability) feature into the VCSA (vCenter Server Appliance). This feature is only available for the vCenter Server Appliance, not for vCenter Server on Windows. So how does this feature work at a high level? When it is enabled, a three-node cluster (Active, Passive and Witness) is deployed. It is important to mention that vCenter HA is not a DR solution; it is an availability solution. Availability for the VC can also be gained by, for example, just making use of ESXi HA. The difference is that ESXi HA is hardware driven while VC HA is software driven.

- The active node is the node that is currently operational.

- The passive node has the same configuration as the active node. It’s a clone of the active node.

- The witness node has a smaller footprint than the active and passive nodes (but it still has about 13 disks).

If the active node goes down, we are all good: the passive node takes over in approximately 5 minutes. If both the passive node and the witness node go down, the active node protects itself by shutting itself down completely. When a node fails, there are two options: 1) redeploy the node, or 2) fix the failed node.
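The failure behaviour described above can be written down as a small truth table. This is only a sketch of my own understanding: the first three outcomes come from the session, while the "degraded" and "unavailable" outcomes for the remaining combinations are my assumption.

```python
def vcha_outcome(active_up: bool, passive_up: bool, witness_up: bool) -> str:
    """Describe what a vCenter HA cluster does for a given node state."""
    nodes_up = sum([active_up, passive_up, witness_up])
    if nodes_up == 3:
        return "healthy"
    if not active_up and passive_up and witness_up:
        # From the session: the passive node takes over the active role.
        return "failover to passive node"
    if active_up and not passive_up and not witness_up:
        # From the session: the active node protects itself.
        return "active node shuts itself down"
    if active_up and nodes_up == 2:
        # Assumption: with one of passive/witness lost, vCenter keeps running.
        return "degraded, active node keeps serving"
    # Assumption: any other combination leaves vCenter unavailable.
    return "vCenter unavailable"


for state in [(True, True, True), (False, True, True), (True, False, False)]:
    print(state, "->", vcha_outcome(*state))
```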

Some VCHA design patterns:

Node placement

- Each node should be deployed on different hosts – DRS Anti affinity

- Each node should be deployed on separate datastores – keep VCSA disks together

Networking

- Private network can be Layer 2 or Layer 3

- Private network must be separate from management (VLAN or subnet)

- Latency should not exceed 10 ms across the VC nodes

Misc

- The witness node can’t be replaced with other witness nodes, such as the vSAN witness

- Make use of vSphere VM overrides. Set the VC VMs to the highest priority

Important notes:

Since vSphere 6.7 U1 there are also APIs available for vCenter HA.

There are two supported backup methods: file-based backup and image-based backup. File-based backup is included in the VCSA by default. Once your VCSA is installed, the file-based backup can be accessed through the VAMI (https://vcsa:5480). The image-based backup does not look deeper into services when making a backup, while the file-based backup does. This is to guarantee that a representative restore can be performed.

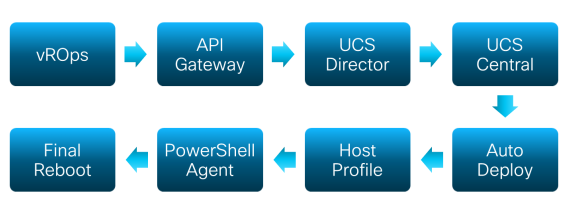

Infrastructure on Demand with VMware and Cisco UCS

This session was a use case from Alaska Airlines. It focused on the path the engineers of Alaska Airlines followed to scale up the infrastructure using automation. The engineers realized that with automation, scaling could be done easily while avoiding human errors and thus keeping the environment consistent. Their goal was to create Infrastructure on Demand (a 100% VMware host delivery process).

High level process overview

As depicted above the engineers made use of: vROps, API Gateway, UCS Director, UCS Central, Auto Deploy, Host Profiles and the PowerShell Agent of UCS Director.

At a high level, vROps (vRealize Operations Manager) creates an alert when a cluster is running low on CPU and/or memory resources. Based on that alert a workflow is started which determines which hostname, cluster, etc. should be used for the new ESXi host. UCS Director uses this output (e.g. the hostname and cluster name) and starts the required workflows. For example, if storage is needed, a storage workflow is started. UCS Central is then used to spin up the server, after which Auto Deploy starts deploying the ESXi host. The ESXi host is then configured using the host profile. These are configurations like the syslog directory, network coredump settings, IP address settings, etc. Afterwards a PowerShell Agent fills in the identity information of the ESXi host: its IPs, VLANs, subnets, etc. After that the ESXi host reboots and voilà, it is working without any human touch.

Above I’ve described the process in a nutshell. A white paper has been published which includes all the details of each step within the process overview. The white paper can be found at:

https://blogs.cisco.com/datacenter/alaska-airlines-infra-automation

Ensure Maximum Uptime and Performance of your vCenter Appliance

Two tools are by default included within the vCenter server and can be used to ensure maximum uptime and performance of your VCSA (vCenter Server Appliance). These tools are: 1: VAMI tools and 2: Vimtop.

Acceptable performance of your VCSA depends on:

- CPU and Memory metrics

- Network performance

- Storage performance

VAMI tools

In the VAMI interface you can monitor CPU & memory, disks, network and database. The VAMI can be accessed at https://vcsa.fqdn:5480

Vimtop

Vimtop monitors the processes running within the VCSA. Vimtop is like ESXTOP, but it is used to monitor the VCSA, and it uses a subset of the esxtop switches. To run Vimtop, SSH to the VCSA and type vimtop on the command line. As in ESXTOP, shortcut keys can be used to get the output you want, e.g. press ‘p’ to pause, ‘h’ to see the help screen and ‘q’ to quit. Vimtop can be used for historical analysis, because data can be exported. The exported data can, for example, be imported into Windows Perfmon to analyze historical data.

Some Recommendations

- Keep the CPU utilization under 70%. It can spike, but ideally it should stay below 70%.

- Keep the memory utilization below 80–90%.

- Monitor the disk usage of the VCSA. Keep it below 80–90%. A full disk can cause big problems.
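These recommendations are easy to turn into a simple check. The sketch below assumes you have already collected the utilization percentages yourself (for example by reading them off the VAMI or from exported vimtop data); the `check_vcsa` function and the choice to warn at the lower bound of the 80–90% range are my own.

```python
from typing import Dict, List

# Warning thresholds in percent, taken from the recommendations above.
# For memory and disk I warn at the lower end of the 80-90% range (assumption).
THRESHOLDS = {"cpu": 70.0, "memory": 80.0, "disk": 80.0}


def check_vcsa(metrics: Dict[str, float]) -> List[str]:
    """Return a warning for every metric at or above its threshold."""
    warnings = []
    for name, limit in THRESHOLDS.items():
        value = metrics.get(name)
        if value is not None and value >= limit:
            warnings.append(f"{name} at {value:.0f}% (threshold {limit:.0f}%)")
    return warnings


# Hypothetical sample values, not measurements from a real appliance.
print(check_vcsa({"cpu": 65, "memory": 85, "disk": 50}))
```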

Troubleshoot and Assess the Health of VMware Environments with Free Tools

I was very interested in the free tools VMware recommends to troubleshoot and assess the health of VMware environments. Below is a list of the tools that came up in this session:

- vRealize Log Insight

For now vRealize Log Insight is free. EOL (End Of Life) is next year. Using this tool you can interactively explore a lot of events, hosts, errors, etc.

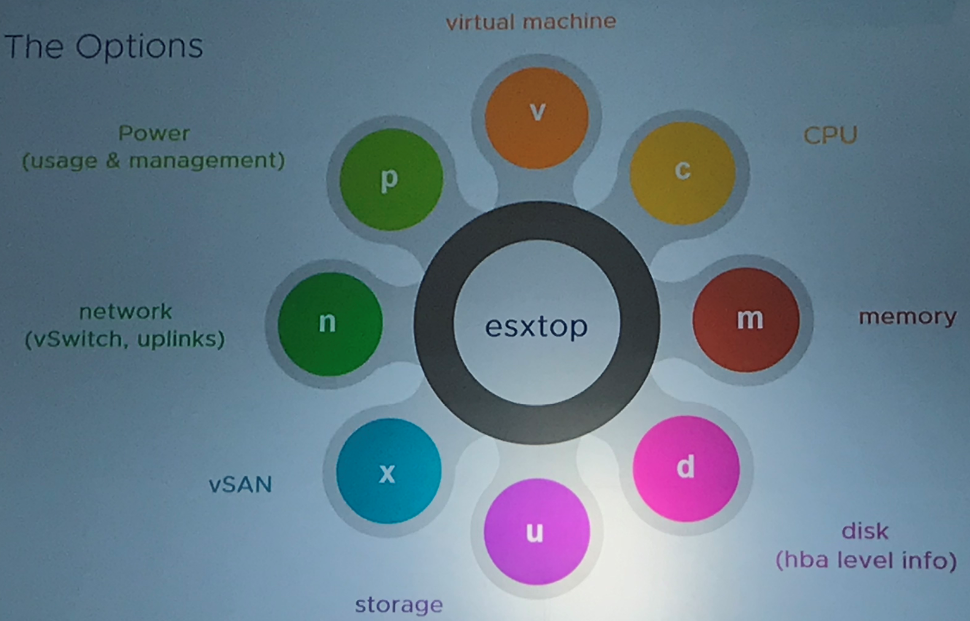

- ESXTOP

This is a utility built into ESXi. With this utility you can gather a lot of data without the need to analyze logs.

- Community Tools

- vCheck

This is a PowerShell HTML framework script. It can be run manually or as a scheduled task. Output can be an HTML web page or an email.

- RVTools

This is a Windows .NET 4.0 application which uses the VI SDK to display information about your virtual environments. It provides almost the same information as vCheck.

- VEEAM One Free

This tool is similar to vROps.

- VMware PowerCLI MP for Linux & MAC OS

- vDocumentation

This is a PowerCLI script that produces infrastructure documentation.

- Vester

This is a tool to easily validate and remediate your vSphere configuration.

Updates regarding above mentioned tools will be visible on: http://www.explorevm.com/

VMware Validated Design for SDDC: Architecture and Design

VMware launched VVD (VMware Validated Designs) a while ago. The latest version is 4.3. But what actually is a VVD? A VVD is a set of documents (almost 1500 pages) which provides blueprints for how to plan, deploy and configure an SDDC (Software Defined Data Center).

Within these documents there are around 380 design decisions that relate to the whole SDDC stack.

The SDDC stack consists of the building blocks:

- vSphere

- vSAN

- NSX

- vRealize Suite

- Other

Even when you do not plan to use the whole SDDC stack it can be very useful to consult the VVD design documents. They can serve as a reference book.

Reference urls

High level representation of all key aspects within VVD can be found on:

- https://vmware.com/go/vvd-sddc-poster

- https://vmware.com/go/vvd-diagrams

- https://vmware.com/go/vvd-stencils

- https://vmware.com/go/vvd-docs

For those who are interested, VMware has a VMware community. Furthermore, there is also a VVD community which contains other relevant information.

ESXTOP Technical Deep Dive

As an Infrastructure Engineer I am quite familiar with ESXTOP and I use this tool when necessary. Since I know that troubleshooting can be performed using ESXTOP, I was interested in this deep dive. For those who are unfamiliar with this tool: ESXTOP is a built-in command line tool. It provides real-time information on resource utilization and is a key tool for troubleshooting performance issues. It can be run remotely through vMA or directly on an ESXi host. The only caveat is that it does not keep historical data.

To access ESXTOP, just run ‘esxtop’ on the ESXi command line and you are good to go.

There are a lot of options which can be used. Below, some key options are mentioned:

In this deep dive I learned that a lot of troubleshooting steps can be performed on the ESXi host. This is a pretty powerful tool. The key is to understand the thresholds of the metrics. There are a lot of thresholds, values, percentages, etc. which can be obtained. To be able to troubleshoot properly you really need to know what the values mean and how they deviate from their defaults.

Good reads are:

- ESXTOP parameters and its uses

https://communities.vmware.com/docs/DOC-9279

- ESXTOP threshold values

http://yellow-bricks.com/esxtop

Day 3.

Today I have been able to attend a few nice sessions again. The sessions mainly had a focus on topics concerning: APIs, Network DRS, vRA (vRealize Automation), and the application of certificates within vSphere.

Don’t sleep on RESTful APIs for vSphere

I registered for this session because I guess I am really sleeping on RESTful APIs (I mean I am really unfamiliar with APIs for vSphere). As an infrastructure engineer I was very curious what vSphere APIs are and what added value they have.

Very short RESTful API overview

REST stands for Representational State Transfer. REST can be seen as an architectural style; it’s not a standard. REST is stateless (which means that the server does not need to keep track of the client’s state between requests). Nowadays a lot of programming languages can talk REST out of the box, e.g. PowerShell, Python and Java.

So what is the terminology within REST?

- CRUD: Create, Read, Update, Delete

- Safe vs Unsafe:

  - Safe: where data will not be modified (read)

  - Unsafe: where data will be modified (create, update, delete)

- Idempotent

  - Identical requests produce the same result

  - Caveat: create

A REST call consists of the following components:

GET: This is the method, the action that should be performed

vcsa.fqdn/rest: This is the endpoint which should be consulted

vcenter/vm: This is the resource

vm-15: This is the parameter we are looking for
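Put together, these four components form one request. The snippet below is a minimal sketch using only Python’s standard library; it only builds the request and does not send it. The host name `vcsa.fqdn` is the placeholder from the example above, and `build_rest_call` is a helper name I made up.

```python
from urllib.request import Request


def build_rest_call(method, endpoint, resource, parameter=None):
    """Combine method, endpoint, resource and parameter into one request."""
    url = f"https://{endpoint}/{resource}"
    if parameter:
        url = f"{url}/{parameter}"
    return Request(url, method=method)


call = build_rest_call("GET", "vcsa.fqdn/rest", "vcenter/vm", "vm-15")
print(call.get_method(), call.full_url)
# prints: GET https://vcsa.fqdn/rest/vcenter/vm/vm-15
```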

Different methods can be used for a REST call. These can be GET, PUT, DELETE, PATCH, and POST:

- GET is about getting information from the server

- PUT is to replace existing information

- PATCH is to update the information

- DELETE is when you want to delete something

- POST is about something you want to create
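To connect the methods with the terminology above (CRUD, safe and idempotent), I find a small lookup table helpful. The safe/idempotent flags below follow the general HTTP conventions as I know them, not anything vSphere-specific, and `is_safe_to_retry` is just an illustrative helper.

```python
# method: (typical CRUD action, safe, idempotent)
HTTP_METHODS = {
    "GET": ("read", True, True),
    "POST": ("create", False, False),        # the "caveat: create" case
    "PUT": ("replace", False, True),
    "PATCH": ("partial update", False, False),  # not guaranteed idempotent
    "DELETE": ("delete", False, True),
}


def is_safe_to_retry(method: str) -> bool:
    """An identical request can be repeated blindly only if it is idempotent."""
    return HTTP_METHODS[method.upper()][2]


for name, (action, safe, idempotent) in HTTP_METHODS.items():
    print(f"{name:<6} {action:<15} safe={safe} idempotent={idempotent}")
```

This is why a retried POST deserves extra care: sending the same create request twice may create two objects.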

The API Explorer can be accessed by navigating to:

https://<vchostname>/apiexplorer (accessible once vCenter Server has been deployed).

vSphere RESTful API

This is a developer- and automation-friendly API and interface that simplifies automation and development. So what can you do with it? On the appliance you can, for example, make changes to DNS, create accounts, check the health of the appliance, monitor services and statistics, and configure and test aspects like DNS, hostname, firewall rules and routes. For a VM you can, for example, perform hardware configuration like boot properties and the connection status of Ethernet, SATA and SCSI devices. You can also update CPU and memory, perform disk operations, and handle life cycle and power management. On the vSphere management level you can, for example, obtain information about clusters, datastores, folders and networks. On the vCenter side you can, for example, repoint your PSC from external to external, repoint PSC registration, perform stage 2 configuration, etc.

Not everything is possible yet e.g. snapshot management. But VMware is busy trying to include these options.

Start using the APIs

Interested in starting to work with the APIs? Since vSphere 6.5 VMware has a built-in ApiExplorer. This explorer provides a list of all available APIs. If you have installed vCenter Server in your own environment, the Explorer can be accessed by navigating to: https://vchostname/apiexplorer

VMware code ApiExplorer:

https://code.vmware.com/apis/
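As a first experiment after the session, something like the following could be used to list VMs through the vSphere 6.5 REST API. Treat it as a sketch under assumptions: it uses only Python’s standard library, the host name and credentials are placeholders, the function names are mine, and I have not hardened it (for example, a lab vCenter with a self-signed certificate will need a suitable `ssl.SSLContext` passed in as `context`).

```python
import base64
import json
from urllib.request import Request, urlopen


def session_request(vc_host, username, password):
    """Build the login call; the response carries a session id in "value"."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return Request(
        f"https://{vc_host}/rest/com/vmware/cis/session",
        method="POST",
        headers={"Authorization": f"Basic {token}"},
    )


def list_vms_request(vc_host, session_id):
    """Build the GET call for the vcenter/vm resource."""
    return Request(
        f"https://{vc_host}/rest/vcenter/vm",
        method="GET",
        headers={"vmware-api-session-id": session_id},
    )


def list_vms(vc_host, username, password, context=None):
    """Log in and return the VM summaries (needs a reachable vCenter)."""
    with urlopen(session_request(vc_host, username, password), context=context) as resp:
        session_id = json.load(resp)["value"]
    with urlopen(list_vms_request(vc_host, session_id), context=context) as resp:
        return json.load(resp)["value"]


# Building the requests works offline; list_vms() needs a real vCenter.
req = list_vms_request("vchostname", "example-session-id")
print(req.get_method(), req.full_url)
```

The ApiExplorer mentioned above is a good place to check the exact resources and fields your own vCenter version exposes before relying on a sketch like this.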

APIs have always interested me, and I would certainly like to continue working on this topic.

Closer Look at vSphere programming & CLI Interfaces

So we have been talking about the APIs. But why do we actually need APIs?

- APIs can be used for automation purposes

- Product integration e.g. second or third-party integration

Clustering Deep Dive 2: Quality Control with DRS and Network I/O Control

A long time ago vSphere only had DRS, which was based on CPU and memory. Nowadays vSphere also provides NIOC (Network I/O Control). NIOC is enabled by default when creating a new VDS (vSphere Distributed Switch). By default, NIOC comes with a set of predefined traffic types; no new traffic types can be added. Default types are, for example, VCSA traffic, management traffic, vMotion traffic and VM traffic.

Within the traffic types different share values exist. By default, the VM traffic type gets the highest priority. Shares only kick in when the physical NIC is fully saturated. You can also make use of limits. The typical recommendation is not to use limits, although they can be useful in specific situations, e.g. for backups.

An important note here is that a limit is set per uplink adapter. For example, if you have one ESXi host with two uplink network adapters and you set a limit of 3 GB, the total limit for the ESXi host will be 6 GB, because limits are enforced per uplink. NIOC can currently only be applied to ingress traffic. For egress traffic one can make use of traffic shaping.
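The per-uplink arithmetic is simple, but easy to forget when sizing a limit. As a tiny worked example (the function name is mine, and the unit is whatever unit you configured the limit in):

```python
def host_total_limit(limit_per_uplink, uplinks):
    """A NIOC limit is enforced per uplink, so the host total scales with uplinks."""
    return limit_per_uplink * uplinks


# The example from the session: a limit of 3 on a host with two uplinks.
print(host_total_limit(3, 2))  # prints: 6
```

So if the intention is to cap a traffic type at 3 for the whole host, the configured limit has to be divided by the number of uplinks.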

In my opinion vSphere has proven DRS based on CPU and memory, while DRS based on network utilization is still a work in progress. There are plenty of challenges on the network side, as this seems to be more difficult than normal DRS, which acts on CPU and memory.

Uncover the power of vRealize Automation

So far I have unfortunately not worked with vRA (vRealize Automation). As automation has been hot for quite a long time already, this topic interested me, and I was curious about the possibilities of automation on vSphere. In short, vRA makes use of blueprints. A blueprint can, for example, be used to deploy VMs.

In the blueprint settings you can, for example, specify the number of instances a user can roll out and the VM names. You can also specify how VMs should be deployed, for example whether the blueprint should use a full clone or a linked clone, which is based on a snapshot.

There are three kinds of blueprints which can be deployed with vRA:

- Software components. These can be used to specify which application must be installed on the instance.

- Machine blueprints. These are used to just deploy a VM with an OS.

- Custom service blueprints. These are used to customize properties.

The nice thing about vRA is that you can automate deployment of VMs. Besides, you can also specify which applications must be installed on an instance by making use of workflows. vRA makes use of vRO (vRealize Orchestrator) to perform additional workflows. Workflows can be linked together. For example roll out VM x and perform workflow Y like install application Y. In such a way a VM is ready to be used when it is deployed. No additional actions are needed.

Custom properties can be used within vRA to unlock extra options which are normally unavailable. For example, if you want VMs to end up in a specific VM folder, this is normally not available as an option in the vRA blueprint. Using custom properties this becomes possible. There are many different custom properties, for example for the creation of snapshots: by default, only one snapshot is allowed. (The custom properties reference can be consulted for a list of all custom properties.)

vRA has drawn my attention. At Itility my colleague and I will soon work on a test project that will focus on the integration of Puppet with vRA to manage application delivery. I really look forward to starting the tests.

Deciphering successful vSphere certificate implementation

I have noticed that customers want to implement vSphere certificates. I have been familiar with this topic since vSphere 5.5. One thing I can say is that this really was a tough process. That’s why this session interested me.

Previously, certificates were issued to each service (SSO, vCenter Service, Inventory Service, Web Client) and had to be implemented manually. Since vSphere 6.7 it’s all automated. You can now renew / refresh certificates using one button in the vSphere 6.5 HTML5 client. Note, though, that this is only possible in the HTML5 client. This feature will probably not become available in the vSphere Web Client, as the Web Client is deprecated.

VMCA (VMware Certificate Authority) supports the following options for deployment:

- VMCA as a certificate authority (default deployment)

  - All certificates are signed by the VMCA. The main benefit is that this deployment option requires minimal administration.

- Make use of an external certificate authority

  - All certificates are signed by the trusted root CA. The disadvantage of this method is that six separate certificates are needed.

- Make use of VMCA as a subordinate (least preferred)

  - The root signing certificate for the VMCA is a subordinate. The disadvantage is that for every certificate signing request the VMCA will be included in the chain.

- Make use of a hybrid solution (combination of VMCA & external CA). This is the most preferred solution

  - The endpoints (vCenter and PSC) use CA-signed certificates while solution users use certificates signed by the VMCA. The main benefit is that external-facing certificates are signed by the root authority.

VMware admits that there was a lot of ambiguity regarding the certificates. They have now published a list of requirements for all imported certificates. This includes best practices such as the minimum key size, which values must be filled in during the CSR (Certificate Signing Request), unsupported settings, the use of weak hash algorithms, etc.

For me the certificate process seems much simpler than before, especially the renew / refresh process of the certificates with one button in the vSphere HTML5 client. Unfortunately, you still need to log in to the CLI for the CSR (Certificate Signing Request).

Day 2.

General Session CEO VMware: Technology Superpowers

Today the CEO of VMware (Pat Gelsinger) provided a general session. This year is VMware’s 20th anniversary celebration. VMware is shaping the future of cloud, mobile, networking, and security, as well as innovating in emerging areas like IoT, AI (Artificial Intelligence), Machine Learning, Edge Computing, and Containers. It was very impressive to attend the presentation.

For those who are interested, you can review the keynote general session here.

Container and Kubernetes 101 for vSphere Admins

Yesterday we had an introduction and demo regarding Kubernetes on VMware. Today’s session elaborated on more high-level concepts like containers, Docker, and Kubernetes. Although this session was similar to the one presented yesterday, the repetition was not frustrating, I guess because “the power of repetition is in the repetition”. Today I learned the following about containers, Docker and Kubernetes.

Container

The classical stack makes use of compute, network and storage. The hard problem we face with this classical stack lies at the “operating system & dependencies” level.

This is where containers come into play. Containers give us the advantage of creating multiple applications without OS dependencies. A container can be seen as the core application function.

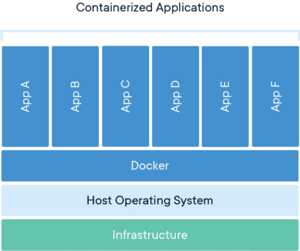

Docker

So then what is Docker? The benefit of Docker is that it packages applications in containers, allowing them to be ported to any system. Containers actually virtualize the operating system: the OS is split up into virtualized compartments, which makes it possible to run applications in containers. Docker commands are executed on a Docker host, which runs within a VM. Many different containers can be created within a Docker host. The picture should give a clearer idea.

Figure 1: source Docker website

Kubernetes

Kubernetes is one of the orchestration tools for managing and maintaining containers. Other available tools are Mesos and Docker Swarm. So why is Kubernetes actually needed? The answer is very simple. It’s easy to deploy Docker images, but Docker alone is not convenient for running and maintaining thousands of containers. For this an orchestration tool like Kubernetes is needed. Using an orchestration tool such as Kubernetes has advantages for management tasks, e.g. DNS, load balancing, traffic control, the scheduler, and the controller manager.

vSphere Clustering Deep Dive, Part 1: vSphere HA and DRS

The concepts of HA and DRS are clear for the VMware administrators among us. This session provided a deep dive on HA and DRS. For those who are interested, I have got a book on this topic (signed by Niels Hagoort, Frank Denneman, and Duncan Epping). So comment below if you want to read more.

Some vSphere HA basics:

vSphere HA works in case of failure on one of the following levels:

- Compute

- Storage connection

- OS and application

In vSphere 6.5 some additional HA features are introduced:

- Restart Priority

Previous releases had 3 different priority levels. The level indicates the priority with which the VM should be restarted on the HA ESXi host. In 6.5 there are 5 different levels: the levels ‘Lowest’ and ‘Highest’ have been added. You can now also specify when the next batch of VMs needs to start. There are different conditions to choose from; one of them, for example, is to restart the next VM when the previous VM is powered on.

- Restart dependency

Here you actually specify HA DRS rules. The main difference with restart priority is that a whole group of VMs needs to be started before the next HA group of VMs will be started. If one VM in the group has not started yet, the other group of VMs will not start either. That’s why it is preferred to use restart priority instead of restart dependency.

- Admission control

There are 3 algorithms to reserve resources:

• Percentage based. The most flexible and the preferred option. The calculation is based on the amount of resources being used by each running VM.

• Slots based. This was previously the default option. The calculation is based on the highest VM resources.

• Failover host. Here you specify a specific host which will not be used within the cluster. Not recommended, except in particular cases.
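To see the difference between the percentage-based and the slot-based calculation, I worked through a simplified example. This is a rough sketch and not the actual HA algorithm: it uses a single CPU and memory figure per VM and ignores overhead and other details, and all names and numbers are made up.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class VM:
    cpu_mhz: int  # CPU figure counted for this VM (simplified)
    mem_mb: int   # memory figure counted for this VM (simplified)


def percentage_based_ok(vms: List[VM], cluster_cpu_mhz: int,
                        cluster_mem_mb: int, failover_pct: int) -> bool:
    """True if all VMs fit in what remains after reserving failover_pct."""
    usable_cpu = cluster_cpu_mhz * (100 - failover_pct) / 100
    usable_mem = cluster_mem_mb * (100 - failover_pct) / 100
    return (sum(vm.cpu_mhz for vm in vms) <= usable_cpu
            and sum(vm.mem_mb for vm in vms) <= usable_mem)


def slot_size(vms: List[VM]) -> Tuple[int, int]:
    """Slot-based: the slot is sized after the largest VM on each dimension."""
    return max(vm.cpu_mhz for vm in vms), max(vm.mem_mb for vm in vms)


def slots_per_host(host_cpu_mhz: int, host_mem_mb: int, vms: List[VM]) -> int:
    """Number of slots a host offers, limited by its scarcest resource."""
    cpu_slot, mem_slot = slot_size(vms)
    return min(host_cpu_mhz // cpu_slot, host_mem_mb // mem_slot)


vms = [VM(500, 1024), VM(2000, 4096), VM(1000, 2048)]
print(slot_size(vms))                     # (2000, 4096)
print(slots_per_host(20000, 65536, vms))  # 10
print(percentage_based_ok(vms, 40000, 131072, failover_pct=25))  # True
```

The example shows why the percentage-based option is considered more flexible: with slots, one large VM inflates the slot size for every VM, while the percentage-based calculation adds up what each VM actually counts for.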

Troubleshooting

The fdm.log is usually stored in /var/log. This log is important when analyzing whether a host failed and why HA kicked in.

Features regarding failures

VM Component Protection is one of the features that protects against storage failure. There are a few failure types:

- Permanent device loss (PDL). Troubleshooting can be done using the fdm.log and vmkernel.log, and the fdm.log of the master ESXi host.

- All paths down (APD). It takes about 5 minutes before the VM restarts. Troubleshooting can be done using the fdm.log on the APD-impacted host and the vmkernel.log (where you can see that the APD state has been entered).

Proactive HA is also one of the new features in vSphere 6.5. It describes what should happen with a host if something happens to the hardware. This feature detects hardware conditions by receiving alerts from the hardware itself.

Some DRS Basics

DRS was first introduced in 2006. DRS is based on active part of CPU and Memory. The idea of DRS is to provide the VMs with the best resources it demands. But time has changed in 2018. In vSphere 6.5 there are 3 new DRS options:

- VM Distribution

This distributes VMs evenly across the cluster. This makes use of number of VMs as metric, not the size of the VM. This is a best effort feature. You will see a better distribution across the cluster. - Network Aware DRS

This feature considers the network utilization of hosts and VMs for load balancing and initial placement operations. Network Aware DRS does not trigger balancing when host network utilization is unbalanced. Network Aware DRS is more efficient when making use of VDS (vSphere Distributed Switches).

- Predictive DRS

This makes use of predictive data. DRS receives a forecast of behavior for the upcoming 60 minutes and distributes the VMs so that they are ready to satisfy the demand. For example, if a VM uses a specific amount of memory at a specific time every day, Predictive DRS will make sure that the VM is placed on an ESXi host where it can make use of these resources.
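The idea behind Predictive DRS can be sketched in a few lines of Python. This is only a toy illustration of placing a VM by its forecast peak instead of its current demand; it is not how DRS is implemented, and the function name, host names, and numbers are all made up.

```python
def pick_host(host_free_mb, forecast_mb):
    """Return the host with the most free memory that can still hold the
    VM's forecast peak, or None when no host can."""
    peak = max(forecast_mb)
    candidates = {h: free for h, free in host_free_mb.items() if free >= peak}
    if not candidates:
        return None
    return max(candidates, key=candidates.get)


# Free memory per host right now (hypothetical values).
host_free_mb = {"esx1": 4096, "esx2": 16384, "esx3": 8192}

# Forecast memory demand of one VM over the upcoming 60 minutes.
# The VM needs 2048 MB now, but a daily job pushes it to 12288 MB.
forecast_mb = [2048, 2048, 12288, 6144]

print(pick_host(host_free_mb, forecast_mb))  # esx2
```

Looking only at the current 2048 MB, every host would qualify. Looking at the forecast peak, only esx2 does, so the VM is placed there before the demand arrives.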

vSphere SSO Domain Architect Unplugged

This session was actually pretty interesting, because it differed from the recommendations I had received from VMware in the past. It cleared up some topics and helped me prepare for the future PSC architecture. Some news:

- Deprecated

• Windows vCenter Server is deprecated. There is a migration tool to migrate from Windows to the appliance. Windows vCenter Server will not be available in the next major release, only the appliance (VCSA).

• vSphere Web Client (Flash) is deprecated. The HTML5 client will be fully featured in vSphere 6.7 U1.

• vSphere 5.5 end of support is September 19, 2018. There is no direct upgrade path from 5.5 to vSphere 6.7.

- New

• In vSphere 6.7 repointing is possible to a different SSO domain between external PSCs. In vSphere 6.7 U1 repointing is possible to a different SSO domain between embedded PSCs.

• There is a converge tool

• It is used to migrate from external PSC to embedded PSC

• CLI-based tool

• If you have a load balancer, make use of the load balancer IP address within the converge tool. The tool will automatically select one of the PSCs.

You first perform a migration using the converge tool and after that you decommission the old PSC.

- Recommendations

• In the past VMware recommended making use of an external PSC. The recommendation now is to make use of an embedded PSC. The only reason to stay in external PSC mode is when you make use of vSphere 6.0 or vSphere 6.5 before U1.

It is recommended to make a backup* before performing a PSC architecture change. The backup must be:

- Image based

- File level based (included in vSphere 6.5)

The recommendation is to keep a maximum latency of 100 milliseconds between vCenter Servers. Otherwise performance issues can be experienced.

Recommendation for upgrading

- First upgrade to vSphere 6.7 U1

- Then perform the PSC architecture changes. Before 6.7 U1 you need to perform more tasks.

Before decommissioning, note all solutions which are talking to the PSC. Otherwise the decommission can be fatal, for example when making use of NSX.

*Note: do not make use of snapshots for PSCs. This is not the same as an image or file level backup.

HA PSC

Since vSphere 6.5 vCenter has an HA solution. vCenter HA is based on an active-passive solution.

vCenter HA is also used for HA of the PSC. vCenter HA makes use of:

- An Active VCSA

- A Passive VCSA

- A Witness VCSA

If 2 nodes fail, the VCSA shuts itself down. The maximum latency must be 10 ms between all three nodes.
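The three-node behaviour can be summarised in a small Python sketch. It is a simplified mental model under my own assumptions, not VMware code: the function name and the state labels are hypothetical, and only the "two nodes down means shutdown" rule comes from the session.

```python
def vcha_outcome(active_up, passive_up, witness_up):
    """Rough outcome of a vCenter HA cluster for a given node health."""
    up = sum([active_up, passive_up, witness_up])
    if up <= 1:
        return "shutdown"   # two (or more) nodes lost: no majority left
    if not active_up:
        return "failover"   # assumed: the Passive node takes over the Active role
    if up == 2:
        return "degraded"   # assumed: Active keeps serving without full redundancy
    return "healthy"


print(vcha_outcome(True, True, True))    # healthy
print(vcha_outcome(False, True, True))   # failover
print(vcha_outcome(True, False, False))  # shutdown
```

The Witness never serves vCenter itself; in this model it only counts towards the majority, which is why losing it together with one other node stops the service.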

Note: vCenter HA is not a DR solution. If the VC / PSC is corrupted for some reason, then the corruption will be replicated. So you still need a backup and recovery solution. File based backup was introduced in vSphere 6.5. File level backup checks the services before it backs up the appliance. If a service is down, for example, the backup will not be created, because a backup of a VC that is down is not representative when performing a restore.

VMware Cloud Foundation Architecture Deep Dive

Yesterday I attended two sessions on the subject of “Cloud”. Currently I have little experience with cloud, but I have ambitions to gain more knowledge, so I registered for this cloud deep dive session. VMware Cloud Foundation is an SDDC (Software Defined Data Center) solution. Cloud Foundation makes use of the Cloud Foundation Builder VM, which is a Photon OS VM that is delivered as an OVA. This OVA helps deploy a full SDDC stack which is based on VVD (VMware Validated Designs).

A basic setup can be performed within approximately 30 minutes.

Architecture Overview

The cloud foundation architecture consists of the following building blocks:

- Compute (vSphere)

- Storage (VSAN) (VSAN as External storage supported)

- Network (NSX) (External Managed Switches)

- Management (vRealize Suite)

VMware Cloud Foundation makes use of automated workload deployment. Core components included within VMware cloud foundation are:

- SDDC Manager

- vSphere (vCenter/PSC/ESXi)

- vSAN

- NSX

- vRealize Log Insight

When making use of this SDDC, expansions such as cluster expansions (e.g. extra hosts) are done in a few simple clicks. Installing and configuring is not needed. This will be done for you.

This solution really made me curious about its possibilities, such as ease of workload deployment, management, ease of migration, automated LCM, expansion etc. Technically, this really looks like a promising solution that I want to focus on.

Looking forward to tomorrow!

Day 1.

Today was the first day of the event. First impression: wow! First of all, it’s very nice to be in Vegas and to gain knowledge, but how different this is from the local sessions I previously joined in the Netherlands. The location itself, the ambiance, the crowd, and the organization are all very, very different.

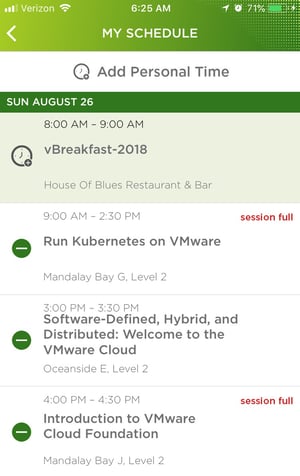

In a nutshell, the agenda topics for my colleague and me included the following:

1. Run Kubernetes on VMware

2. Software-Defined, Hybrid, and Distributed: Welcome to the VMware Cloud and Introduction to VMware Cloud Foundation

3. Future of vSphere Client (html5)

4. Welcome Reception

Run Kubernetes on VMware

This was the first session we joined. As infrastructure engineers, Kubernetes isn’t one of the topics we are involved in during our daily work. This session included a nice introduction. VMware is offering two methods to make use of Kubernetes on VMware. These are:

- PKS (Pivotal Container Service, GA since Feb 2018)

- VKE (VMware Kubernetes Engine, available since Q3 2018)

The main difference between these two methods is that VKE is more like a SaaS service. So it’s kind of a KaaS (Kubernetes as a Service) solution. The PKS solution is a self-managed environment while VKE is a fully managed environment. During this session we had the possibility to play around with VKE (although it is not officially released yet).

Software-Defined, Hybrid, and Distributed: welcome to the VMware Cloud and introduction to VMware Cloud Foundation

The main thing we learned here is that multi-cloud deployments (also known as hybrid cloud) keep increasing these days. According to VMware’s survey multi-cloud deployments will keep increasing year after year. There is no "one-cloud-fits-all" solution. All solutions have their own specialties. VMware offers the VMware cloud provider which functions as a sort of pivot between different cloud providers. This makes it possible to have one contact point, while in the background use multiple cloud providers, such as Google Cloud Platform, Oracle, IBM, AWS, or Microsoft Azure.

VMware Cloud Foundation is the simplest path to hybrid cloud. The solution makes use of VVDs (VMware Validated Designs) and golden design standards. VMware will soon release VMware Cloud Foundation 3.0 including:

- More choices in deployment options (vendors for public as well as private cloud)

- Improved scaling (up to 1000s of nodes)

- Quick and easy move of workloads across clouds

- Multi-site guidance will be available for vSAN, DR, stretched clusters, cross-vCenter etc.

Future of vSphere Client (html5)

For the vSphere administrators among us:

The vSphere HTML5 client is the future of VMware vSphere. The plan is for the HTML5 client to be fully featured in Q3 (covering 100% of what is possible with the vSphere Web Client). The latest HTML5 update will show you the PowerCLI commands for every operational task being performed in the client.

Welcome Reception

It was solutions-exchange-time. Here we were able to meet VMware partners and have some food and fun like playing games, networking with each other, and time for some goodies of course.