Making hidden costs visible so you can right-size your cloud-based platform

A nightmare scenario for every CIO is an IT landscape that lacks performance or capacity. It costs you money because it slows down or disrupts your business processes, and it leaves you with dissatisfied customers and employees. But the answer is not to provision double the capacity just to be sure. That puts you on a slippery slope of hidden costs, loss of overview, and impractical operations.

Before you can make a well-founded decision on the capacity actually needed to run your application landscape smoothly, you need insight into the facts: how much capacity you have up and running (e.g. number of CPU cores, memory size), how much of that capacity the applications within the business use and when, and how that use is spread over time (e.g. are there any big spikes or dips in CPU utilization?).

We created a Cost Control Tool to provide meaningful information for right-sizing virtual machine resources. The tool creates reports that show at a glance how much money you could have saved if your virtual machine (VM) had been right-sized. For example, it compares the computing power you used with the computing power you have available. In other words: would downscaling be feasible?

To get a better understanding of how we provide this information, let us dip our toes into a typical data lake approach. We start with the classic way of measuring CPU utilization (and thus the number of cores you need to keep everything running). Usually, this is calculated as an average over a given time frame, let’s say a month. As you can imagine, this never gives you an accurate picture, because an average smooths out the effects of big spikes or dips.
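To make the smoothing effect concrete, here is a minimal sketch with made-up numbers (the sample values are hypothetical and do not come from the Cost Control Tool). One hour of per-minute CPU readings contains a short spike that the average almost completely hides:

```python
from statistics import mean

# Hypothetical per-minute CPU utilization (%) for one hour:
# 57 quiet minutes at 10% and a 3-minute spike at 95%.
cpu_percent = [10.0] * 57 + [95.0] * 3

average = mean(cpu_percent)  # what a monthly-average report would show
peak = max(cpu_percent)      # what the busiest minute actually needed

print(f"average: {average:.2f}%  peak: {peak:.1f}%")
# prints "average: 14.25%  peak: 95.0%"
```

Sizing on the 14.25% average would leave the machine far short of capacity during the spike, while sizing on the 95% peak pays for headroom that is idle almost all of the time.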

The next step is a more advanced approach: zoom in on the data (in this case the CPU utilization for every minute of a full month) so you can draw conclusions about the CPU capacity that is needed most of the time and about the exceptional big spikes. This gives you the insight to make the right choices on how to size your IT landscape. It is even possible to build in a certain error margin. For example, you accept that for some big spikes that occur very rarely you won’t have the full capacity available, so the process runs a bit slower. We realize that a lack of capacity is hardly ever acceptable, so with our Cost Control Tool you can make a well-founded decision and justify the costs incurred. It gives you the information you need to find the balance between optimal capacity and cost efficiency.
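The error-margin idea can be sketched as a simple calculation. This is an illustration under stated assumptions, not the Cost Control Tool's actual method: the function names, the `allowed_slow_minutes` parameter, and the price per core are all hypothetical.

```python
import math

def required_cores(cpu_percent, provisioned_cores, allowed_slow_minutes=0):
    """Cores needed to cover every minute except the busiest `allowed_slow_minutes`."""
    if not cpu_percent:
        raise ValueError("at least one sample is required")
    if not 0 <= allowed_slow_minutes < len(cpu_percent):
        raise ValueError("allowed_slow_minutes must be in [0, number of samples)")
    ordered = sorted(cpu_percent)
    # Skip the busiest minutes we accept running slower; size for the next one.
    utilization = ordered[len(ordered) - allowed_slow_minutes - 1]
    # Utilization is a share of the provisioned cores; round up to whole cores.
    return max(1, math.ceil(utilization / 100.0 * provisioned_cores))

def monthly_saving(provisioned_cores, needed_cores, price_per_core):
    """Saving from downscaling; zero when no downscaling is possible."""
    return max(0, provisioned_cores - needed_cores) * price_per_core

# Hypothetical data: 990 minutes at 20% and 10 spike minutes at 90% on an 8-core VM.
samples = [20.0] * 990 + [90.0] * 10
strict = required_cores(samples, provisioned_cores=8)
relaxed = required_cores(samples, provisioned_cores=8, allowed_slow_minutes=10)
print(strict, relaxed, monthly_saving(8, relaxed, price_per_core=25.0))
```

With these numbers, covering every spike keeps all 8 cores, while accepting 10 slower minutes brings the requirement down to 2 cores. Whether that trade-off is acceptable is a business decision; the calculation only makes its price visible.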

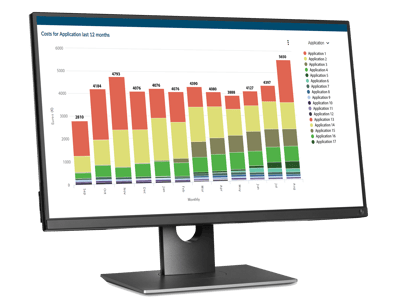

Cost Control Tool - Costs for applications last 12 months

Of course, you can’t rely on data from just one month. But with data collected over a longer period of time, the Cost Control Tool enables you to predict how much capacity you will need in the upcoming months. You can scale your virtual machines down or up accordingly, which translates directly into lower or higher operational costs.
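How the tool produces its prediction is not described here. As a stand-in, the sketch below fits a straight-line trend through monthly capacity figures and extends it forward; the function name `linear_forecast` and the sample history are assumptions made for illustration only.

```python
def linear_forecast(history, months_ahead):
    """Extend a least-squares straight line through `history` by `months_ahead` points."""
    n = len(history)
    if n < 2:
        raise ValueError("at least two months of history are required")
    x_mean = (n - 1) / 2
    y_mean = sum(history) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(history))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    # Month indices continue after the last observed month (index n - 1).
    return [intercept + slope * (n - 1 + k) for k in range(1, months_ahead + 1)]

# Hypothetical cores needed in each of the last four months.
cores_needed = [2.0, 2.5, 3.0, 3.5]
forecast = linear_forecast(cores_needed, months_ahead=2)
print(forecast)
```

A straight line ignores seasonality and one-off events, so a real forecast would likely need a richer model and more history than this example uses.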

CPU utilization is only one element in the big IT picture. We apply the same approach to calculate, for instance, your optimum memory and storage capacity. The best thing is: you can save costs by arranging your IT environment as efficiently as possible, with minimal impact on the end user.

Learn how we can transform your IT operations together with Itility Cloud Control