How to build a stack in a matter of weeks?

What if you have only a few weeks onsite to build a complete stack from scratch? A team of seven engineers accepted the challenge and traveled to Las Vegas to build a stack of nearly three petabytes of storage. Veteran stack engineer Dhiraj shares his experience.

Although Itility has a history of building stacks internationally, this was my first project abroad. In Las Vegas, of all places, in a Switch data center almost a quarter of a square kilometre in size. There, we set out to build a platform of almost three petabytes, linked to four UCS chassis. We were building a landing platform for our customer’s internal applications. The team consists of a project manager, two storage engineers, a backup engineer, and three stack engineers, including myself.

Some specs: the four UCS chassis make up two architectures, one for IaaS and one for PaaS. We installed 24 blade servers for IaaS purposes and six for PaaS. The environment is split into two UCS domains, one for the rackmount servers and one for the blades. As a backup system we use Rubrik with eight bricks, or 32 nodes. In other words: we have more than enough boxes.

Triple check your work

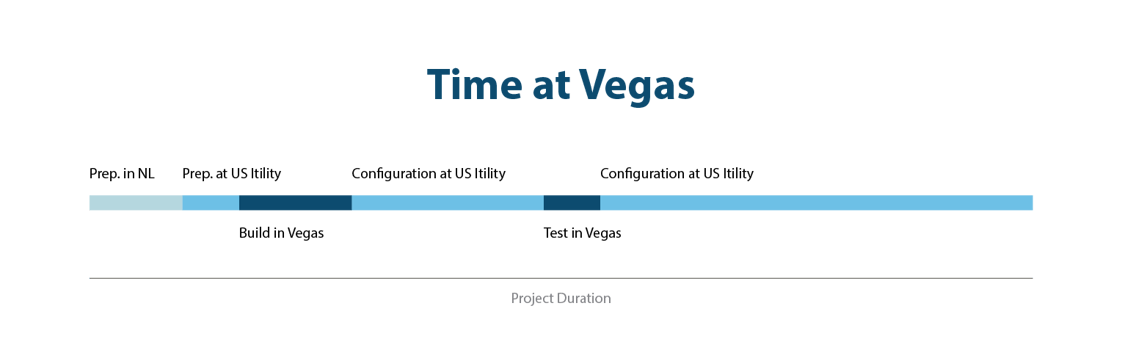

The biggest challenges we face are time and distance. A lot of preparatory work is done from our offices in the Netherlands and in the US. Once we arrive in Las Vegas, we need to hit the ground running, because we only have access to the data center for a limited number of days. So, if anything goes wrong, we have to fix it on the spot.

Instead of double checking our preparation, we triple check everything, such as:

- Are all team members from Itility and vendors registered with the data center?

- Does everyone know their tasks and responsibilities?

- Is the rack design finished down to a tee?

- Is the wiring correct and the connectivity diagram dummy-proof?

- Are all the tools packed, such as the advanced toolset, magnetic screwdriver, scissors, and label printer?

- Has the label format been approved?

- Do the designs meet all Itility architectural standards?

- Have all final building blocks been approved?

We align our designs with our colleagues in the Netherlands, based on our architectural standards. These include several building blocks and automation templates, with which we connect the hardware in a standardized fashion. We also ordered all equipment in the Netherlands, because of the long lead times. In the US, we perform the final checks and ensure all teams are up to speed. Additionally, we have prepared an extensive ‘shopping list’ with necessities such as usernames, domain credentials, SSH access, and relevant IP addresses.

Secretive

With all preparations in order, we head to the data center. The terrain is immense and secured as if it were a military compound. A large wall surrounds the data center, shrouding everything in mystery. Even the Uber drivers did not know exactly where they were driving us. Once inside, the rules and policies take some getting used to. All equipment, for example, needs to be tested in a separate room before it is allowed into the final cage.

Hot fact on cooling

You might be wondering: why would someone place these hot servers in a desert? Why not in Silicon Valley, where our customer is located? At first, I thought it was a simple matter of cost, but the heat actually works to our advantage. Dry hot air is easier to extract from a room, which makes the cooling process easier. Additionally, this is a low-risk region with regard to environmental disasters, and it produces more energy than it uses. These benefits make this location both a cheaper and a more stable place to build a data center.

Prepared for setbacks

No matter how well prepared you are, in a project of this magnitude you are always bound to run into challenges. The first surprise we encountered was the labeling of the racks. The label coding used in the data center was different from our own, which meant we needed to update the rack designs accordingly. We also discovered that some parcels had been delivered to other Switch locations. And at first glance, it looked like the rails we had ordered for the racks didn’t quite fit.

Then there were things you just have to deal with: all deliveries came in unmarked boxes, so we had no idea which box contained which hardware. As a result, we had to unpack and sort everything. We needed a total of 30 boxes to sort all equipment and accompanying rails, cables, screws and other accessories.

Pressure cage

Once things get going, you are anything but alone. Multiple teams (from the data center, the customer, vendors, and us) are working simultaneously. Mix-ups start to happen, especially when the Switch team unexpectedly changes their schedule and someone gets ready to work in a rack where the Switch team just happens to be testing PDUs.

At first, everything is still neat and orderly. The racks are empty, and things are quiet. As the week progresses, more devices start humming and the temperature in the cage rises. Our own temperature rises as well, especially once the schedule changes and our access to the management network layer is postponed for three days. Things get tense as pressure rises, because we need enough time to properly finish the cable management.

Triple testing

Once we step out of the data center, it is time to start the actual testing of the management and infrastructure layers. Since we want to make absolutely sure that the entire chain is tested, we do this in three test cycles. Only if each test succeeds will the data center be taken into production.

1. Platform test

The first test is the platform test, which we perform on-site in Las Vegas. We test all infrastructure components according to our test plan. We check whether the monitoring is up, redundancy is in place, everything is labeled, any errors pop up, all systems have been set up according to the naming convention, and we can log on using LDAP.

2. Power test

The remote project team tests the interfaces between the individual stacks in order to detect any errors before going live.

3. Soft shutdown test

The last step is to turn the entire cage off. The Switch data center team then turns on every piece of equipment in a specific order, according to our rack design. This order is determined using dependencies in our schedule. All the tests passed successfully.
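To illustrate how a power-on order can follow from dependencies, here is a minimal Python sketch based on a topological sort. It is not the tooling used in this project: the device names and the dependency map are hypothetical and only serve as an example.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map (illustration only): each device lists
# the devices that must already be powered on before it is switched on.
DEPENDENCIES = {
    "pdu": set(),
    "core-switch": {"pdu"},
    "storage": {"pdu", "core-switch"},
    "fabric-interconnect": {"pdu", "core-switch"},
    "blade-chassis": {"fabric-interconnect", "storage"},
    "backup-cluster": {"core-switch", "storage"},
}


def power_on_order(dependencies):
    """Return a list in which every device comes after all of its dependencies.

    Raises graphlib.CycleError if the dependencies contain a cycle.
    """
    return list(TopologicalSorter(dependencies).static_order())


order = power_on_order(DEPENDENCIES)
for step, device in enumerate(order, start=1):
    print(f"{step}. power on {device}")
```

Several valid orders may exist for the same dependencies. The sort only guarantees that no device is switched on before the devices it depends on.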

Finalizing the stack

Everything has been tested. We now have a few weeks to implement generic services through VMs. These include, among others, Active Directory, Global Directory Service, NetIQ, MFA-NAAF, Splunk, SCOM and Darktrace. These are standard services we need to install in order for the customer to run their applications.

A total of 23 VMs need to be implemented, for several different teams. Because every team is responsible for their own configuration, and each person has a different approach, this takes some organization. Solid planning is, once again, indispensable: we map the requirements and specifications, user rights, and schedule for each VM and team.

The project was a success, with only a few setbacks along the way. This has shown me that, as is often the case, good preparation is half the battle. But in addition to that, the use of standardized building blocks, automation templates, and predefined scripts is indispensable to complete tasks of this magnitude in a limited time frame.