Container orchestration: a means to an end

Let’s be honest: running a container platform should not be a goal in itself. After all, container technology is a convenient means to an end. The goal is to support businesses in building reliable, scalable, secure, and high-performing products and services. For this reason, both IT and OT (Operational Technology) departments have widely adopted the use of container orchestration technology (e.g., Kubernetes) over the past few years.

Does this technology offer an off-the-shelf holy grail? Our answer is “no”.

The rise of container technology

First, let’s start with the basics. Why has the adoption of container technology risen so fast?

In a world that is growing more digital every day, organizations increasingly deliver their services through applications. Some meet simple customer demands (e.g., a portal for support tickets), others more complex ones (e.g., increasing factory production efficiency through the use of AI).

With these applications playing a central role in business processes, application stability is a prerequisite. After all, a portal that cannot be reached does little to build confidence among its users, and an application that can bring the production process to a standstill when it malfunctions can cause millions in damage. Yet even as stability is key, the pressure to release updates to existing features and to introduce new ones keeps increasing.

The solution to cater to both needs (stability and speed) is the microservice model, in which application features are developed and released as loosely coupled pieces of code. This allows developers (often part of a Scrum or DevOps team) to work on a specific feature in isolation from other features and to release it without having to update the entire application. This benefits the efficiency and manageability of an application, and it makes it easier to scale the development teams that collaborate on a software solution. On top of that, isolated processes make it possible to zoom in on specific features for process optimization. We will refer to these features as microservices from here onward.

Since the time-to-market of microservices should be as short as possible, development teams require fast delivery of environments for development and testing. And since microservices can be worked on in isolation from one another, teams often need multiple isolated environments at the same time. Traditionally, these environments were provided on bare-metal servers or virtual machines (VMs). However, this approach is costly, so development teams often have too few environments to work efficiently, which delays the time-to-market of a feature. This is where containers come in.

Containers are isolated environments in which microservices run independently of one another. They are lightweight: faster to spin up and more resource-efficient than VMs (a single VM can host multiple containers). Their lightweight nature also makes containers less robust, causing them to crash more often than VMs. Although that might seem like a disadvantage, it makes them a perfect environment for software engineers who need to build robust applications. After all, resources are likely to crash in production from time to time as well. Identifying the implications of such failures during the development and testing phase allows software engineers to build contingencies into the software that prevent a negative impact on performance.
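One common contingency of this kind is retrying a failed call with exponential backoff, which gives a crashed container time to be replaced. The sketch below assumes a Python microservice in which an unreachable dependency surfaces as a `ConnectionError`; `call_with_retry` and `FlakyService` are hypothetical names for illustration, not part of any particular framework.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `operation`, retrying on ConnectionError with capped exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except ConnectionError:
            if attempt == max_attempts:
                raise  # give up and let the caller degrade gracefully
            # The delay doubles per attempt (capped); random "full jitter" keeps many
            # clients from retrying a restarted container in lockstep.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))
    raise RuntimeError("unreachable")


class FlakyService:
    """Hypothetical dependency that fails a few times, e.g. while its container restarts."""

    def __init__(self, failures: int) -> None:
        self.remaining_failures = failures
        self.calls = 0

    def fetch(self) -> str:
        self.calls += 1
        if self.remaining_failures > 0:
            self.remaining_failures -= 1
            raise ConnectionError("dependency unavailable")
        return "ok"


service = FlakyService(failures=2)
result = call_with_retry(service.fetch, sleep=lambda _: None)  # skip real waiting
print(result, service.calls)  # ok 3
```

Injecting `sleep` keeps the sketch testable; in a real service the default `time.sleep` applies, and a crash injected in a test environment exercises exactly this code path.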

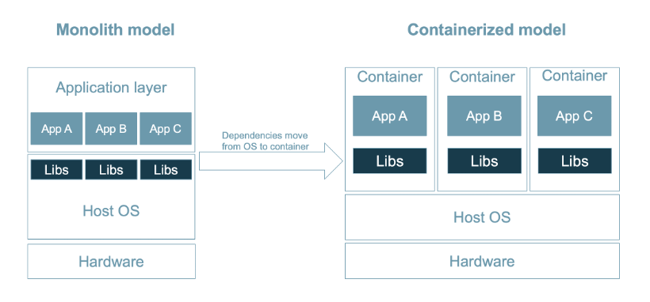

Another advantage of containers is that the software libraries traditionally provided by the host OS are now packaged in the container itself. Consequently, microservices that run in containers are largely unaffected by library updates on the host OS (containers do still share the host's kernel), so a containerized microservice behaves consistently regardless of the environment it runs in.

Moreover, in a world that is reaping the benefits of the cloud, we see that most organizations have a cloud strategy that is hybrid or multi-cloud. Containerized software is easy to port across these different environments.

In short, there are multiple benefits to the use of containers for organizations:

- Enabled iterative software development and process optimization by supporting the microservice model.

- Reduced time-to-market by fast delivery of environments for developing and testing features.

- Improved cost efficiency by more efficient utilization of resources.

- Reduced dependency of microservices on underlying platforms.

With so many benefits, you might wonder why the entire world is not running on containers yet. One reason is that it takes effort (time and money) to refactor an existing application in such a way that its components fit in containers. And although there are multiple benefits to containers, these benefits are not required for ALL applications. In the end, it all comes down to the business case. Another reason is that containers need to be managed and orchestrated.

The need for container orchestration platforms

Containers by themselves do not solve several platform issues, such as scalability, break-fix, and connectivity. Take scalability: when business demand is dynamic, your applications need to cope with sudden increases and decreases in resource consumption, so the number of containers has to be scaled accordingly, which a container cannot do by itself. Then there is break-fix: when a container fails, a new one needs to be spun up to keep the application available, and the failure must stay isolated to that single container. Connectivity is a topic as well: containers are isolated from one another by design and have dynamic IP addresses, so if you want them to communicate, their network destinations have to be updated continuously.
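To make the scaling problem concrete: the documentation of the Kubernetes Horizontal Pod Autoscaler describes its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change made while the ratio stays within a tolerance (0.1 by default). The function below is a minimal sketch of that rule for a single metric; the real controller also handles stabilization windows, missing metrics, and pod readiness, which this sketch omits, and the `min_replicas`/`max_replicas` defaults are assumptions.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Replica count an HPA-style autoscaler would aim for, given one metric."""
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: avoid flapping
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))  # clamp to bounds


print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
print(desired_replicas(4, current_metric=20, target_metric=60))  # 2
print(desired_replicas(4, current_metric=63, target_metric=60))  # 4
```

For example, four replicas averaging 90% CPU against a 60% target scale to six, while a reading of 63% falls within the tolerance and leaves the count unchanged.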

So even though containers have multiple benefits, they contain little intelligence in terms of integration and operations. Luckily, these issues can be solved by using a container orchestration platform, such as Kubernetes.
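Orchestrators such as Kubernetes address these issues with control loops: they repeatedly compare a declared desired state with the observed state and act on the difference. The toy sketch below illustrates that reconciliation idea for the three issues above (break-fix, scaling, connectivity). It is not how Kubernetes is implemented; `ToyOrchestrator`, `Container`, and the made-up IP scheme are hypothetical.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Container:
    name: str
    ip: str  # assigned at start-up, so it changes whenever a container is replaced
    healthy: bool = True


@dataclass
class ToyOrchestrator:
    """Hypothetical, heavily simplified orchestrator managing one service."""

    desired_replicas: int
    containers: list[Container] = field(default_factory=list)
    endpoints: list[str] = field(default_factory=list)
    _ids: count = field(default_factory=lambda: count(1))

    def _start_container(self) -> Container:
        n = next(self._ids)
        return Container(name=f"web-{n}", ip=f"10.0.0.{n}")

    def reconcile(self) -> None:
        """Move the observed state toward the desired state."""
        # Break-fix: discard failed containers; the healthy ones keep running.
        self.containers = [c for c in self.containers if c.healthy]
        # Scaling: start new containers until the desired count is reached...
        while len(self.containers) < self.desired_replicas:
            self.containers.append(self._start_container())
        # ...or stop surplus ones when the desired count went down.
        del self.containers[self.desired_replicas:]
        # Connectivity: republish the current IPs so clients can still find the service.
        self.endpoints = [c.ip for c in self.containers]


orch = ToyOrchestrator(desired_replicas=3)
orch.reconcile()
print(orch.endpoints)  # ['10.0.0.1', '10.0.0.2', '10.0.0.3']

orch.containers[1].healthy = False  # web-2 crashes
orch.reconcile()
print(orch.endpoints)  # ['10.0.0.1', '10.0.0.3', '10.0.0.4']
```

The point of the design is that operators only change the desired state (for example `desired_replicas`); the loop, not a human, works out which containers to start, stop, or replace.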

As container landscapes grow in size and complexity, it is clear that container orchestration platforms are needed. These platforms let us manage containerized environments through sets of parameters. For example: what should a pod (a group of one or more containers that share network and storage) look like if it is to perform well? Which pods are allowed to talk to each other? When do I scale up? What type of resources do I want my container landscape to use?
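In Kubernetes, such parameters are declared in manifests. As a sketch, the Python below builds two of them as plain dictionaries: a Deployment that sets replicas and resource requests/limits, and a NetworkPolicy that only admits traffic to a pod from one other app. The app names, image reference, and resource values are hypothetical; the printed JSON could be saved to a file and passed to `kubectl apply -f`. Note that NetworkPolicies are only enforced when the cluster's network plugin supports them.

```python
import json


def deployment(name: str, image: str, replicas: int, cpu: str, memory: str) -> dict:
    """Build a minimal apps/v1 Deployment manifest."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        # Requests inform scheduling; limits cap what the container may use.
                        "resources": {
                            "requests": {"cpu": cpu, "memory": memory},
                            "limits": {"cpu": cpu, "memory": memory},
                        },
                    }]
                },
            },
        },
    }


def allow_from(target_app: str, source_app: str) -> dict:
    """NetworkPolicy: only pods labelled app=source_app may connect to target_app."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"{target_app}-allow-{source_app}"},
        "spec": {
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [{"from": [{"podSelector": {"matchLabels": {"app": source_app}}}]}],
        },
    }


manifests = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [
        deployment("tickets-api", "registry.example.com/tickets-api:1.0", 3, "250m", "256Mi"),
        allow_from("tickets-api", "tickets-portal"),
    ],
}
print(json.dumps(manifests, indent=2))
```

Generating manifests from code (or templating them with a tool such as Helm) keeps these choices reviewable and repeatable instead of hand-edited per environment.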

The choices you make when configuring a container platform directly affect application performance, cost efficiency, and security. In other words, the benefits such a platform offers are not optimized automatically. At an abstract level, one could therefore argue that operations teams still face the same problems as in the ‘old world’, even though the underlying technology has changed.

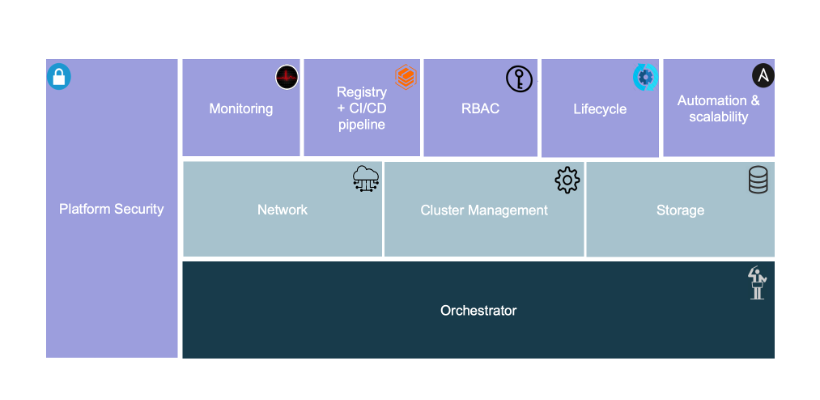

As the figure above illustrates, one still has to, for example:

- Define optimal cluster configurations, including routing decisions.

- Provision the resources to be used by containers (manually or through infrastructure as code).

- Set up a central monitoring environment to gather data on infrastructure, containers, and microservices.

- Manage the life cycle of both containers and microservices.

- Configure (role-based) access control for users.
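To illustrate the last item: Kubernetes RBAC is additive, meaning there are no deny rules, so a request is allowed only if some role bound to the user grants that verb on that resource. The evaluator below is a minimal sketch of that model. The roles, users, and rules are hypothetical, and real Kubernetes rules also scope by API group, namespace, and resource name, which this sketch leaves out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    resources: frozenset[str]  # "*" matches any resource
    verbs: frozenset[str]      # "*" matches any verb


# Hypothetical roles and bindings for illustration only.
ROLES: dict[str, list[Rule]] = {
    "developer": [
        Rule(frozenset({"pods", "deployments"}), frozenset({"get", "list", "watch"})),
        Rule(frozenset({"deployments"}), frozenset({"update", "patch"})),
    ],
    "auditor": [Rule(frozenset({"*"}), frozenset({"get", "list"}))],
}

BINDINGS: dict[str, set[str]] = {
    "alice": {"developer"},
    "bob": {"auditor"},
}


def is_allowed(user: str, verb: str, resource: str) -> bool:
    """Deny by default; allow if any role bound to the user has a matching rule."""
    for role in BINDINGS.get(user, set()):
        for rule in ROLES.get(role, []):
            resource_ok = resource in rule.resources or "*" in rule.resources
            verb_ok = verb in rule.verbs or "*" in rule.verbs
            if resource_ok and verb_ok:
                return True
    return False


print(is_allowed("alice", "patch", "deployments"))  # True
print(is_allowed("alice", "delete", "pods"))        # False
```

Keeping roles small and binding users to several of them tends to be easier to audit than one broad role per team.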

At Itility, we manage all these factors in our standard way: through data and code. A simple example is automatically scheduling when pods run based on application utilization patterns (e.g., scaling them down outside office hours). This can greatly reduce cluster costs.
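A minimal sketch of such a schedule, assuming (hypothetically) that utilization is concentrated in weekday office hours from 08:00 to 18:00, that ten replicas cover peak load, and that one replica suffices otherwise. It also counts pod-hours over one week to show where the savings come from. In practice the replica count might be applied by a scheduled job (for example a Kubernetes CronJob), and fewer pod-hours only lower the bill if the node count shrinks as well.

```python
from datetime import datetime, timedelta
from typing import Callable


def scheduled_replicas(
    now: datetime,
    peak_replicas: int = 10,
    off_hours_replicas: int = 1,
    office_start: int = 8,
    office_end: int = 18,
) -> int:
    """Replicas to run at `now`: the peak count on weekdays during office hours."""
    is_weekday = now.weekday() < 5  # Monday=0 ... Friday=4
    in_office_hours = office_start <= now.hour < office_end
    return peak_replicas if is_weekday and in_office_hours else off_hours_replicas


def weekly_pod_hours(replicas_at: Callable[[datetime], int]) -> int:
    """Sum the replica count over each hour of one week (starting on a Monday)."""
    monday = datetime(2024, 1, 1)  # 1 January 2024 was a Monday
    return sum(replicas_at(monday + timedelta(hours=h)) for h in range(7 * 24))


always_on = weekly_pod_hours(lambda _: 10)
scheduled = weekly_pod_hours(scheduled_replicas)
savings = 1 - scheduled / always_on
print(always_on, scheduled, f"{savings:.0%}")  # 1680 618 63%
```

Under these assumptions, 50 peak hours at 10 replicas plus 118 quiet hours at 1 replica give 618 pod-hours instead of 1,680, roughly 63% fewer.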

Getting started with container technology

If you do decide to build a container landscape, including orchestration functionality, know that you will need to make some key decisions about your platform.

One decision to make is: will you use an open-source platform or a vendor-supported distribution? The market offers a wide variety of products for building, configuring, and managing container landscapes, either as open-source products or as licensed distributions (including vendor support). These range from tools for specific tasks (e.g., Helm for smooth installations and upgrades) to very elaborate solutions (e.g., OpenShift, which includes Kubernetes, Docker, Ceph, Helm, and many more tools).

Our advice is to use an open-source distribution for your organization, unless:

- You need specific development-accelerating features on top of Kubernetes that are in the portfolio (or on the roadmap) of a specific vendor.

- A vendor has a proven track record in providing solutions for use cases similar to yours.

- You want to simplify lifecycle management (as compatibility of underlying tools would no longer be one of your worries).

- You need a fast hotfix procedure in case of bugs in the container platform software (software assurance).

As you can see, we do NOT think that license cost in itself should be considered a hurdle. We would rather focus on the value that a vendor-distributed version brings. License costs should be evaluated in the context of the total cost of ownership over the long term (e.g., five years), including maintenance, support, and development of the product of your choice. Since a vendor-distributed version may reduce those other costs, its total cost might very well be lower than that of an open-source distribution.
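The comparison can be made explicit with a small calculation. The sketch below assumes flat yearly costs and ignores one-off costs such as migration and training; every figure is a hypothetical placeholder chosen to illustrate the argument, not a benchmark, so substitute your own quotes and effort estimates.

```python
from dataclasses import dataclass


@dataclass
class CostProfile:
    """Flat yearly costs in an arbitrary currency unit (illustrative figures only)."""

    license: float
    support: float      # external support contracts
    engineering: float  # in-house effort: maintenance, upgrades, tool compatibility

    def total(self, years: int) -> float:
        return years * (self.license + self.support + self.engineering)


# Hypothetical inputs. The vendor license is assumed to include support.
open_source = CostProfile(license=0, support=20_000, engineering=300_000)
vendor = CostProfile(license=120_000, support=0, engineering=150_000)

for name, profile in [("open source", open_source), ("vendor", vendor)]:
    print(f"{name}: {profile.total(years=5):,.0f}")
# open source: 1,600,000
# vendor: 1,350,000
```

With these made-up numbers, the vendor distribution costs more in licenses but less overall, because it halves the assumed in-house engineering effort; with different inputs the outcome can easily flip.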

Another decision to make is: will you run the platform in the cloud or on-premises? We believe that the question of whether to set up a container platform in the public cloud should be answered as part of your organization’s cloud strategy. If you do not have such a strategy yet, containers can be an accelerator to start a cloud migration. Each major public cloud provider also offers standard catalog items to deploy and run containers and container orchestration platforms (e.g., Amazon EKS, Azure Kubernetes Service, and Google Kubernetes Engine). And the speed and scalability options of the public cloud can offer advantages for your software development teams (and others). This is why we think the cloud is the superior choice.

And the third decision to make is: who is going to build and manage your platform? Your own team, a trusted partner, or a new vendor that is specialized in containers? To build and manage a container platform, one needs hands-on experience with the tools and topics we have mentioned so far. Yet, it is of (at least) equal importance that the responsible team understands the system of which the container platform is a part. After all, containers are just a means to an end.

To get the most value out of a container platform, one should understand how it interfaces with the rest of the IT/OT landscape and how it can contribute to successful products and services. In the end, no one benefits from an underperforming application: a container platform that performs well but is poorly integrated with the rest of the environment still delivers a poor result, and the result is what counts.

Therefore, we recommend building container competence yourself. If you want to speed things up, you should work with a trusted partner that is experienced in this technology. A system perspective in which containers are just one component is paramount.

Conclusion

Container technology can be a strong accelerator for both IT and OT departments, but setting up a proper container landscape is no small feat. As with traditional IT environments, container landscapes require orchestration to control application performance, cost, and security. Many products on the market can help you gain that control, but the best fit for a specific use case can only be determined by evaluating it from multiple angles. What matters most is that a container platform is not a standalone solution: it is just a means to an end.

At Itility, we help our customers with building, running, and optimizing container platforms. Our engineers collaborate with the development teams that use these platforms, so we understand both the administrator’s and the developer’s perspective on containers.

This is how we help our customers get the most value out of their development landscapes. Curious? Get in touch!